Studio Subtitling

By Trados AppStore Team

Free

Description

The Problem this app addresses

The volume of audio visual content for localization is growing rapidly. Turnaround times are getting shorter and many of those working in the industry are feeling increased price pressures in dealing with this sort of content. Translation tools today lack proper context for subtitlers, offering poor support for the variety of file formats.

The Solution this app provides

The Studio Subtitling plugin supports enhanced features for audio visual translation, editing, proofing and works synchronously with the Studio editor in support of the following filetypes:

- ASS (available here on the RWS AppStore)

- SRT (supported out of the box in Trados Studio 2021/2022/2024)

- webVTT (supported out of the box in Trados Studio 2021/2022/2024)

- STL (available here on the RWS AppStore)

- SBV (supported out of the box in Trados Studio 2021/2022/2024)

- TTML (supported out of the box in Trados Studio 2021/2022/2024)

NOTE:

- The plugin does not work with single document projects, resulting in errors. To use it properly, you need to create standard projects.

- It is not possible to use the numpad for non-subtitling files if this plugin is installed. This is a limitation of Studio. The workaround is to remove the keyboard shortcuts for numpad in the Studio options. They can be reset when needed by using "Reset to Defaults" in the Subtitling Keyboard shortcut list.

Click here to download the TQA model for Trados Studio that is also supported by this plugin.

Technical details

4.0.0.3 - Trados Studio 2024

Changelog:

- Updated to Studio 2024

- Fully implemented TellMe features, including the subtitle File Types managed by the AppStore team (*.ass; *.stl; *.webvtt)

Checksum: 679892f76b013eeca8c92c925a8d3b8976a296902863132f15dc19d91b3dc45b

Release date: 2024-06-25

3.1.4.0 - Trados Studio (2022 (SR2), 2022 (SR1))

Changelog:

- Set logs file path to the correct location "Trados AppStore"

Checksum: 5a91719327f9f2136104f0d063eb84980d97d8a9bbf7006e6f0cf3ebaabd9228

Release date: 2023-11-30

3.1.3.0 - Trados Studio 2022 (SR1)

Changelog:

- Force software acceleration to resolve threading related issues on the audio wave form control

Checksum: e0cb668dbd6d24e77118d64777152c09c424ebb32d6aa8e3cad620693af17677

Release date: 2023-09-12

3.0.2.1 - Trados Studio 2022

Changelog:

- Corrected updated plugin manifest to ensure that the plugin will not attempt to install into Trados Studio 2022 SR1. This is important because the SR1 release contains breaking changes that will cause this version of the plugin to prevent Studio from starting. There will be a further update of this plugin specifically for the 2022 SR1 release either alongside, or shortly after SR1 is made publicly available.

Checksum: 185cf15a76cb85e19d51c596d448aea4fd2c27db83b8f091ce5d649078fc0ba8

Release date: 2023-06-06

2.2.5.0 - Trados Studio 2021

Changelog:

- Updated manifest information: maxversion & author

- Updated project dependencies

Checksum: 63e7864f3613a90e9ea458bb96075ec7306e148964e2ea6d71533c44c224dc2d

Release date: 2022-04-17

1.2.4.0 - SDL Trados Studio 2019

Changelog:

- Resolved divide by zero exception when attempting to calculate the video encoding

Checksum: 9c05ee6c210fb04acf669ca149a56e9b47fec7abecff648aa34777d2ddd3dc54

Release date: 2021-08-09

Support website: https://community.rws.com/product-groups/trados-portfolio/rws-appstore/f/rws-appstore


Overview

Studio Subtitling is a plugin for Trados Studio 2019-SR2 and higher that provides features for previewing subtitle captions within the video while translating segments in the Editor. This includes a verification provider with specific QA checks for validating subtitle content, ensuring the translations provided adhere to the standards agreed upon.

As we move forward, we will start to extend our portfolio in supporting additional subtitle formats.

The current release includes file type support for the SubStation Alpha (.ass), SubRip (.srt), WebVTT (.vtt), YouTube (.sbv) and Spruce Subtitle File (.stl) formats.

Trados Studio 2019 SR2+ and Trados Studio 2021 support the following formats out of the box: .srt, .vtt, .sbv and .sub.

There may, however, be certain scenarios where you are advised to install the separate plugin for a better experience.

If you are working with .stl and/or .ass/.ssa file types, you will need to install the relevant plugin.

In addition, we have released a new TQA model generated from the FAR model. It covers the primary requirements for a functional approach to assessing quality for subtitle formats, using the integrated Translation Quality Assessment feature of Studio.

Technical Requirements

We recommend Windows 8.1 (preferably higher) and SDL Trados Studio 2019 SR2 as a minimum.

Setup

Studio Subtitling Plugin

- Download the Studio Subtitling plugin to your local drive.

- Double click on the plugin to launch the Plugin Installer and complete the installation, selecting the supported versions of Trados Studio.

File Type Plugins

- Download the required File Type plugins to your local drive.

- Double click on each of the plugins to launch the Plugin Installer and complete the installation, selecting the supported versions of Trados Studio.

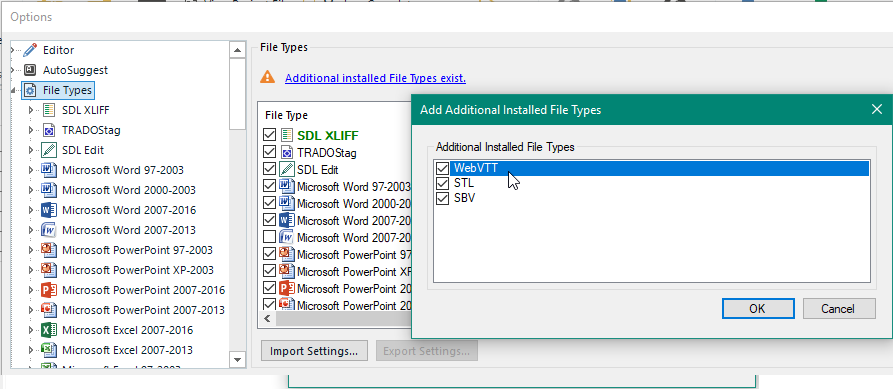

Complete the installation of the File types from within the Studio Options

- Launch Studio.

- Select: File → Options → File Types

- Click on the 'Additional installed File Types exist' link.

- In the Add Additional Installed File Types dialog, check the checkboxes associated with each of the File Types that you want installed.

- Click OK to save the settings.

Subtitling Preview & Data controls

The Subtitling preview and data controls are available for valid subtitle documents. The Preview control provides a real-time preview of the subtitles within the video, whereas the Data control displays the subtitle metadata for each of the segments, along with real time verification feedback as the linguist is providing translations in the editor.

Configuration

Both the preview and data controls can be displayed and positioned anywhere within the Editor view.

To display the preview or data control:

- Open a subtitle document in the Editor.

- Select the Ribbon view.

- Select the option Subtitling Preview or Subtitling Data from the ‘Information’ group.

- Reposition the control, docking or pinning it in the preferred location.

Features

Synchronized selection and content changes

The selected segment from the editor is synchronized with the subtitle track that is displayed in the video. When the user moves to a new segment in the editor, the corresponding track is selected in the video and similarly when the user selects a subtitle track in the video, focus is moved to the corresponding segment in the editor.

Additionally, changes applied to the translation are immediately visible in the subtitle caption from the video, including any formatting that was applied (e.g. bold, italic, underline…).

Real time verification feedback

Displays real time verification feedback as the content is being updated, providing the linguist with a much more informed approach in making decisions as they are translating.

Splitting & Merging segments

Support for splitting and merging segments within and across paragraph boundaries. When merging across paragraphs, all context and structure related to the paragraphs that have been fully merged to the parent paragraph are excluded from the native file that is regenerated with the target content.

Merged and Virtually merged files

Support for working with both merged and virtually merged files. Ideally each subtitle file that is added to the project should have a corresponding video reference with the same name (with exception to the file extension). Each time the user navigates to a new file within the document, the relative video is loaded (if available) and corresponding track in the video is selected.

Editable time tracks

Support for updating the display time of the subtitle captions. This feature permits a linguist to adapt the Start and End time-codes to align better with the translated content. This also includes hotkeys to automatically set the Start/End times from the current position of the video.

Time-code Format

Support for converting and displaying the time-code format between Frames and Milliseconds.

Setting a minimum time span between subtitles

Support for applying the rules of a styleguide to set a minimum time span between subtitles.

Keyboard Shortcuts

Support for assigning keyboard shortcuts to help automate interaction with the subtitling controls from the editor (e.g. jump back/forward in the video by seconds or frames, link/unlink the video, play back from the previous subtitle, etc.).


Usage

Pre-requisite

- Install the Studio Subtitling and File Types plugins, following the steps listed in Setup.

Add a video reference

- Launch the project wizard.
- Add a subtitle document that is supported by one of the File Types installed.
- Decide whether you want to add a video reference for the subtitle document during project creation or at a later stage from the Editor.
  - Add a video reference during project creation
    - The file name of the video should match that of the subtitle document, with the exception of the extension.
    - The video will be a physical resource in the project, meaning that it will be included in the package. Keep this in mind, as video files can be quite large.
  - Don't add a video reference during project creation
    - The video reference can be added at a later stage when the document is opened in the Editor.
    - This option is more appropriate for distribution, keeping the overall size of the project small, while still allowing the linguist to download and add a reference later if required.
- Click on Finish to complete the project creation.
- Open the subtitle document for translation in the Editor.
- If the Subtitling Preview or Subtitling Data controls are not visible in the Editor, then follow the steps listed in Configuration.
- If a video reference was not provided during the project creation, then a default image will be displayed in its place. To load a video reference that coincides with the subtitles in the document, browse and select the media from the settings window of the plugin.
- Once the video reference is loaded, the video will be displayed and subtitles will synchronize with the segments in the document.

Note: The area of the video in the Subtitling Preview control will adapt automatically to the aspect ratio of the video


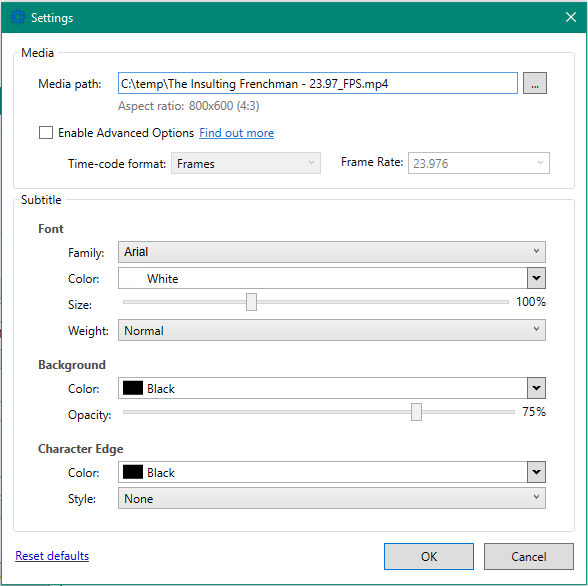

Options

- Go to the Subtitling Preview control.
- Click on Settings to customize:
  - Video
    - Video path
    - Time-code format (Milliseconds or Frames)
    - Frame Rate
  - Subtitle
    - Font, color, weight, and size
    - Background color and opacity
    - Character edge color and style

These will be your default captions format settings until you change them again or click Reset to go back to the default captions format.

Time-code format

The time-code format is always displayed in milliseconds in the Subtitle Preview control. It is read as milliseconds, unless the subtitle document has information to suggest otherwise. If the format should be read as Frames, select the option 'Frames' from the Time-code combobox in the Subtitle Preview Options dialog.

To successfully switch the Time-code format from Milliseconds to Frames:

- The frame rate of the video must be known.

- The fractional seconds (to be identified as frames) cannot exceed the frame rate for any of the time code entries for each of the segments in the document.

- Refer to Time-code Conversion to understand more.

Time-code Conversion

- How to calculate the position in milliseconds, given the frame rate and frame:

Milliseconds Per Frame = 1000 / Frame Rate

Frames To Milliseconds = Frame * Milliseconds Per Frame

Given:

- Frame Rate = 24

- Frame = 4

Result:

- Milliseconds Per Frame = 41.666 (1000 / Frame Rate)

- Frames To Milliseconds = 166.666 (Frame * Milliseconds Per Frame)

- How to calculate the position in frames, given the frame rate and milliseconds

Milliseconds Per Frame = 1000 / Frame Rate

Milliseconds To Frame = Milliseconds / Milliseconds Per Frame

Given:

- Frame Rate = 24

- Milliseconds = 166.666

Result:

- Milliseconds Per Frame = 41.666 (1000 / Frame Rate)

- Milliseconds To Frame = 4 (Milliseconds / Milliseconds Per Frame)
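The two conversions above can be sketched in a few lines of Python. This is only an illustration of the arithmetic described in this section, not the plugin's own code; the function names are made up for the example.

```python
def milliseconds_per_frame(frame_rate: float) -> float:
    """Duration of one frame in milliseconds (1000 / Frame Rate)."""
    if frame_rate <= 0:
        raise ValueError("frame rate must be positive")
    return 1000.0 / frame_rate


def frames_to_milliseconds(frame: float, frame_rate: float) -> float:
    """Frame * Milliseconds Per Frame."""
    return frame * milliseconds_per_frame(frame_rate)


def milliseconds_to_frames(milliseconds: float, frame_rate: float) -> float:
    """Milliseconds / Milliseconds Per Frame (not rounded)."""
    return milliseconds / milliseconds_per_frame(frame_rate)


if __name__ == "__main__":
    # Same inputs as the worked example: Frame Rate = 24, Frame = 4
    print(frames_to_milliseconds(4, 24))
    print(milliseconds_to_frames(166.666, 24))
```

The results are left unrounded here; a caller that needs a whole frame number would round the output of `milliseconds_to_frames`, which is where small precision differences between the two formats can appear.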

Valid Subtitle Document

Any document whose paragraphs have a context of type=sdl:section, code=sec, and contain a metadata entry with a key named timeStamp, whose value conforms to the following time span format:

Time span format: hh:mm:ss[.fffffff]

- "hh": The number of hours in the time interval, ranging from 0 to 23.

- "mm": The number of minutes in the time interval, ranging from 0 to 59.

- "ss": The number of seconds in the time interval, ranging from 0 to 59.

- "fffffff": Fractional seconds in the time interval. This element is omitted if the time interval does not include fractional seconds. If present, fractional seconds are always expressed using seven decimal digits.

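As a rough illustration of the time span format described above, the following Python sketch validates and parses a timeStamp value. It is not taken from the plugin; the function name is hypothetical, and the sketch assumes two-digit hh, mm and ss fields and that the seven fractional digits represent units of 100 nanoseconds.

```python
import re
from datetime import timedelta

# hh:mm:ss with an optional fraction of exactly seven digits
_TIME_SPAN = re.compile(r"^(\d{2}):(\d{2}):(\d{2})(?:\.(\d{7}))?$")


def parse_time_span(value: str) -> timedelta:
    """Parse 'hh:mm:ss[.fffffff]' into a timedelta (hypothetical helper)."""
    match = _TIME_SPAN.match(value.strip())
    if match is None:
        raise ValueError(f"not a valid time span: {value!r}")
    hours, minutes, seconds = (int(match.group(i)) for i in (1, 2, 3))
    if hours > 23 or minutes > 59 or seconds > 59:
        raise ValueError(f"time span field out of range: {value!r}")
    fraction = match.group(4)
    # Assumption: seven digits = 100 ns units. timedelta only stores
    # microseconds, so the last digit is dropped.
    ticks = int(fraction) if fraction else 0
    return timedelta(hours=hours, minutes=minutes, seconds=seconds,
                     microseconds=ticks // 10)


if __name__ == "__main__":
    print(parse_time_span("00:01:02.5000000"))
```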
Verification Settings

We have integrated a specific set of verification checks for working with Subtitle documents. You can specify these verification settings for your project, along with the existing verification tools (e.g. QA Checker 3.0, Tag Verifier and Terminology Verifier)

Procedure

- Go to the Projects view and select the project.

- Go to the Home tab, and select Project Settings from the Ribbon.

- Select: Verification → Studio Subtitling → Verification Settings

- Configure other project settings if necessary and select OK.

QA Checks

CPS

Number of characters per second

Default settings:

Report when less than: 12 (warning)

Report when greater than: 15 (warning)

Calculation:

Total Seconds = (End time - Start time) → Seconds

Characters Per Second = Total Characters / Total Seconds

WPM

Number of words per minute

Based on the recommended rate of 160-180 words per minute, you should aim to leave a subtitle on screen for a minimum period of around 3 words per second or 0.3 seconds per word (e.g. 1.2 seconds for a 4-word subtitle). However, timings are ultimately an editorial decision that depends on other considerations, such as the speed of speech, text editing and shot synchronization.

Default settings:

Report when less than: 160 (warning)

Report when greater than: 180 (warning)

Calculation:

Total Seconds = (End time - Start time) → Seconds

Words Per Second = Words / Total Seconds

Words Per Minute = Words Per Second * 60
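The CPS and WPM calculations above can be sketched as follows. This is an illustrative sketch rather than the plugin's implementation: the function names are hypothetical, line breaks are assumed not to count as characters, and words are assumed to be separated by whitespace.

```python
from typing import Optional


def total_seconds(start_seconds: float, end_seconds: float) -> float:
    """Total Seconds = End time - Start time."""
    duration = end_seconds - start_seconds
    if duration <= 0:
        raise ValueError("end time must be later than start time")
    return duration


def characters_per_second(text: str, start_seconds: float, end_seconds: float) -> float:
    # Assumption: line breaks are not counted; spaces and punctuation are.
    characters = len(text.replace("\r", "").replace("\n", ""))
    return characters / total_seconds(start_seconds, end_seconds)


def words_per_minute(text: str, start_seconds: float, end_seconds: float) -> float:
    # Assumption: words are whitespace-separated tokens.
    words = len(text.split())
    return words / total_seconds(start_seconds, end_seconds) * 60


def check_range(value: float, minimum: float, maximum: float) -> Optional[str]:
    """Return 'below', 'above', or None when the value is within range."""
    if value < minimum:
        return "below"
    if value > maximum:
        return "above"
    return None


if __name__ == "__main__":
    wpm = words_per_minute("one two three four", 0.0, 1.2)
    print(wpm, check_range(wpm, 160, 180))  # default WPM limits from above
```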

CPL

Number of characters per line

Default settings:

Report when greater than: 39 (warning)

Method:

Returns the total number of characters for each line in the subtitle caption.

Report when any of the lines contains more characters than the CPL setting assigned by the user.

LPS

Number of lines per subtitle

A maximum subtitle length of two lines is recommended. Anything greater than that should be used only if the linguist is confident that no important picture information will be obscured. When deciding between one long line or two short ones, consider line breaks, number of words, pace of speech and the image.

Default settings:

Report when greater than: 2 (warning)

Character Count

Spaces and punctuation are counted in all character counts
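The CPL and LPS checks can be illustrated with a short Python sketch. It is not the plugin's code: the function names and warning texts are invented for the example, and the defaults (39 characters per line, 2 lines per subtitle) are the default settings listed above.

```python
from typing import List


def characters_per_line(caption: str) -> List[int]:
    """Character count of each line; spaces and punctuation are counted."""
    return [len(line) for line in caption.splitlines()]


def check_caption(caption: str, max_cpl: int = 39, max_lps: int = 2) -> List[str]:
    """Return warning messages for CPL and LPS violations (hypothetical format)."""
    warnings = []
    counts = characters_per_line(caption)
    for number, count in enumerate(counts, start=1):
        if count > max_cpl:
            warnings.append(f"CPL: line {number} has {count} characters (limit {max_cpl})")
    if len(counts) > max_lps:
        warnings.append(f"LPS: {len(counts)} lines (limit {max_lps})")
    return warnings


if __name__ == "__main__":
    for message in check_caption("First line\nSecond line\nThird line"):
        print(message)
```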

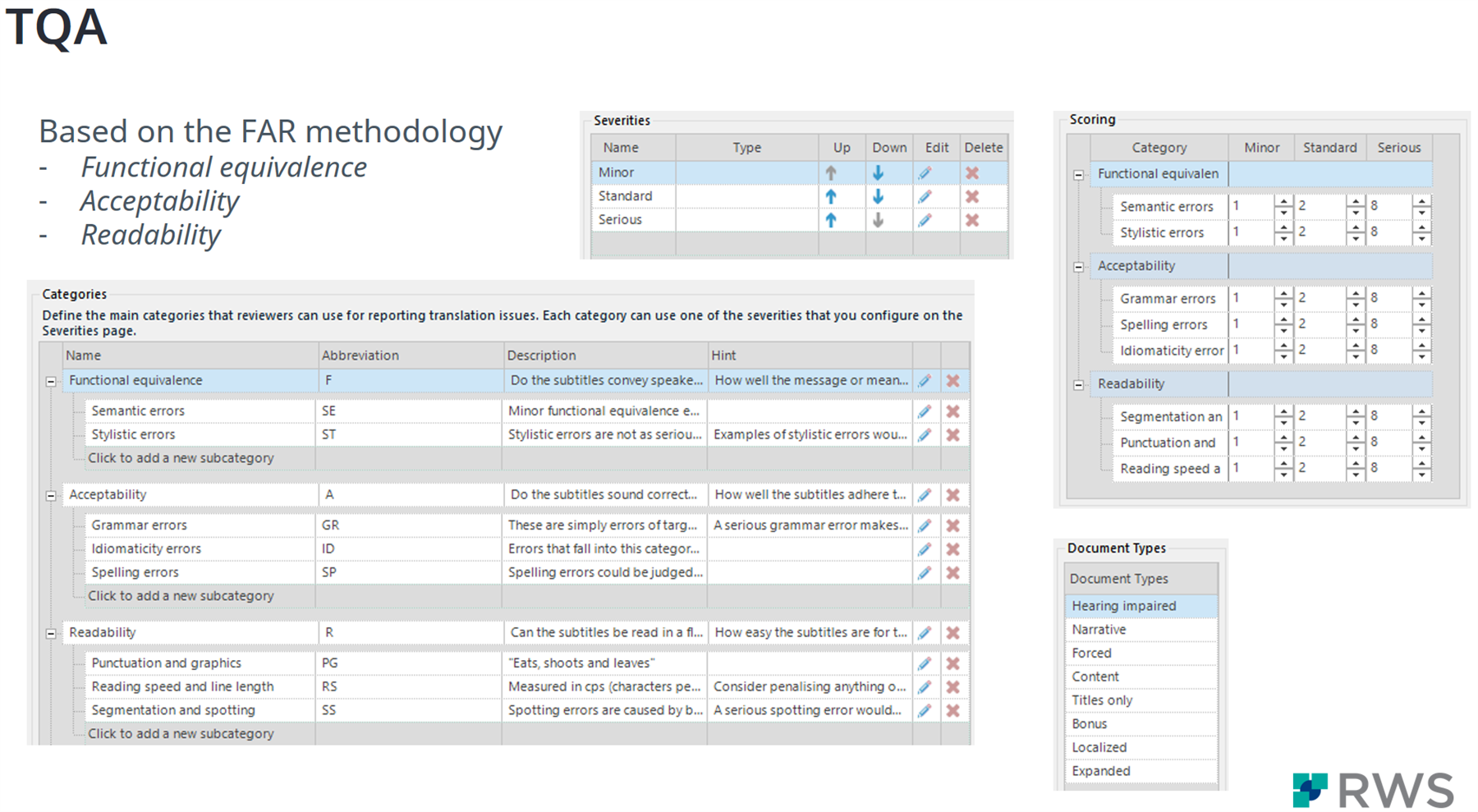

TQA

TQA can be seen as a functional approach to measuring quality in the translated content and, from that assessment, evaluating and improving the process. We are introducing a new TQA model generated from the FAR model. It covers the primary requirements for a functional approach to assessing quality for subtitle formats, using the integrated Translation Quality Assessment feature of Studio.

FAR Model

Functional equivalence* (F)

Do the subtitles convey speaker meaning?

How well the message or meaning is rendered in the subtitled translation.

- Semantic errors (SE)

  The definition of a standard semantic equivalence error would be a subtitle that contains errors, but still has bearing on the actual meaning and does not seriously hamper the viewers’ progress beyond that single subtitle. Standard semantic errors would also be cases where utterances that are important to the plot are left unsubtitled.

  A serious semantic equivalence error scores 2 penalty points and is defined as a subtitle that is so erroneous that it makes the viewers’ understanding of the subtitle nil and would hamper the viewers’ progress beyond that subtitle, either by leading to plot misunderstandings or by being so serious as to disturb the contract of illusion for more than just one subtitle.

  Minor functional equivalence errors are basically lexical errors, including terminology errors which do not affect the plot of the film.

- Stylistic errors (ST)

  Stylistic errors are not as serious as semantic errors, as they cause nuisance, rather than misunderstandings.

  Examples of stylistic errors would be erroneous terms of address, using the wrong register (too high or too low) or any other use of language that is out of tune with the style of the original (e.g. using modern language in historic films).

Acceptability* (A)

Do the subtitles sound correct and natural in the target language?

How well the subtitles adhere to target language norms.

- Grammar errors (GR)

  These are simply errors of target language grammar in various forms.

  A serious grammar error makes the subtitle hard to read and/or comprehend. Minor errors are the pet peeves that annoy purists (e.g. misusing ‘whom’ in English). Standard errors fall in between.

- Idiomaticity errors (ID)

  Errors that fall into this category are not grammar errors, but errors which sound unnatural in the target language.

  It should be pointed out that sometimes source text interference can become so serious that it becomes an equivalence issue.

- Spelling errors (SP)

  Spelling errors could be judged according to gravity in the following way:

  - A minor error is any spelling error.

  - Standard errors change the meaning of the word.

  - Serious errors would make a word impossible to read.

Readability* (R)

Can the subtitles be read in a fluent and non-intrusive way?

How easy the subtitles are for the viewer to process.

- Punctuation and graphics (PG)

  "Eats, shoots and leaves"

- Reading speed and line length (RS)

  Consider penalizing anything over 15 cps and up to 20 cps as a standard error.

  Above that is serious as you wouldn't have time to do anything apart from read the subtitles, and possibly not even finish that.

  Measured in cps (characters per second)

- Segmentation and spotting (SS)

  Spotting errors are caused by bad synchronization with speech (subtitles appear too soon or disappear later than the permitted lag on out-times) or image (subtitles do not respect hard cuts).

  Segmentation errors are when the semantic or syntactic structure of the message is not respected.

  A serious spotting error would be when subtitles are out of sync by more than one utterance. A minor spotting error would be less than a second off, and a standard error in between these two extremes.

Subtitle File Type Support

| Name | Extension | Default time-code format |
| --- | --- | --- |
| SubRip *.srt | srt | Milliseconds |
| WebVTT *.vtt | vtt | Milliseconds |
| YouTube *.sbv | sbv | Milliseconds |
| Spruce Subtitle *.stl | stl | Frames |
| Advanced Substation Alpha *.ass | ssa/ass | Centiseconds (interpreted as Milliseconds) |

FAQ

Q. Can I edit the Start and/or End time-codes of the subtitle from the Subtitling Data control?

A. Yes, editing the time-codes of the subtitle is fully supported. This feature permits a linguist to adapt the Start and End times to align better with the translated content.

Q. How does the plugin recognize which time-code format (i.e. milliseconds vs frames) to use?

A. The time-code format is read as milliseconds, unless the subtitle document has information to suggest otherwise. If the format should be read as Frames, select the option 'Frames' from the Time-code combobox in the Subtitle Preview Options dialog. Please refer to Time-code Format for more information.

Q. Can I merge across paragraphs when working with the supported subtitle formats?

A. Merge across paragraphs is now fully supported with the latest release of the File Types (WebVTT, SBV, STL & ASS) version 1.0.3+ and Subtitling plugin version 1.0.8+. All context and structure related to the paragraphs that have been fully merged to the parent paragraph are excluded from the native file that is regenerated with the target content.

Unmerging segments is no longer supported from Studio 2019 SR1 CU3.

Q. Can I add a new subtitle record or remove an existing one from the list of subtitles visible from the Studio Data Control?

A. This is currently not supported, as it would introduce a requirement to manage a structural change to the paragraphs of the document that would then need to be reflected when regenerating the native format.

Q. I would like to add a verification check that is specific to subtitling other than those included with the Studio Subtitling plugin, how can I do that?

A. First check whether what you are looking for is already included as a standard QA check in the other tools (e.g. QA Checker 3.0, Tag Verifier and Terminology Verifier). It is also possible to create your own checks via the Regular Expressions area in the QA Checker tool. In addition, please communicate any improvements of this nature to the AppStore team, as we welcome suggestions and feedback from the community in areas where we can improve the features for future releases.

Q. I don't have a video reference for the subtitle document that I'm working on; will the verification checks still work?

A. Yes, the QA checks will still function correctly without a video reference, as long as you are working with a document that is recognized correctly by the File Types we have released to support subtitle formats.

Q. Can I update the video reference after the project has been created?

A. The video reference can be associated with the subtitle document during project creation or linked to the active document from the editor at a later time. Any updates to the video reference path from the editor are persisted in the project file without affecting the project resources.

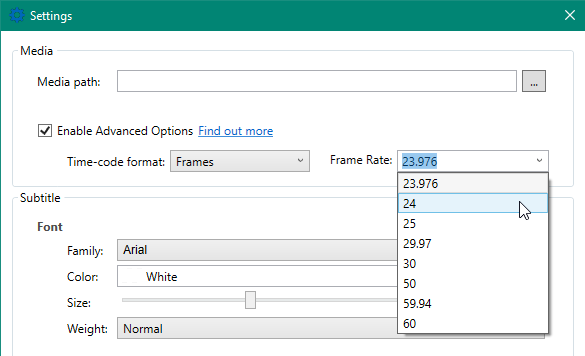

Enabling Advanced Options

The Time-code format and Frame Rate are some of the more sensitive settings when it comes to working with a subtitle document. Modifying these settings can affect precision in accurately calculating the position in the video from the subtitle Start and End time-codes, especially when switching between time-code formats (milliseconds vs frames). It is for this reason that they are categorized as advanced options and disabled by default. The user can however choose to override these settings in cases where the Frame Rate interpreted by the plugin was incorrect or possibly, the user prefers to work in different Time-code format (e.g. in Frames as opposed to Milliseconds).

Procedure

- Go to Subtitling Preview > Options.

- Check the 'Enable Advanced Options' checkbox.

Note: The Time-code format and Frame Rate options are enabled when the checkbox is checked and can be updated by the user. The only exception to this is when a video is also assigned; then the Frame Rate remains disabled as it is inherited from the video.

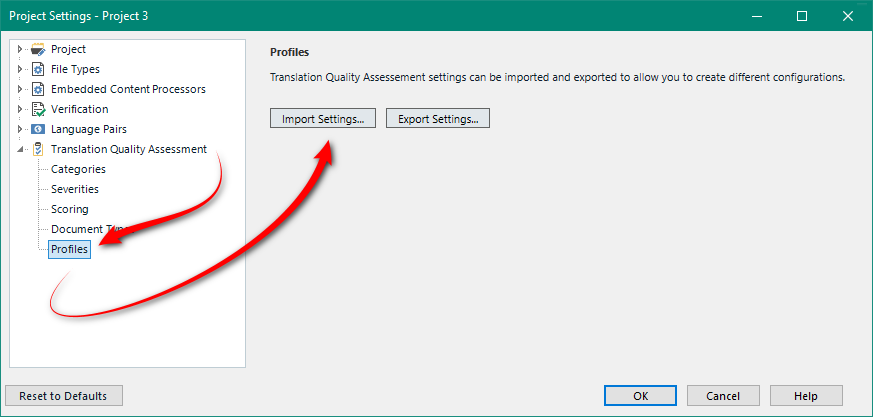

FAR Methodology TQA Settings

Trados Studio Professional provides the ability to create your own TQA profile and share it via an *.sdltqasettings file. The "FAR Methodology TQA Settings" is a TQA profile that has been created by the Trados AppStore Team for use with subtitling projects. It is based on the FAR model published by Professor Jan Pedersen of Stockholm University and is an attempt at creating a generalized model for assessing quality in interlingual subtitling.

The settings file can be downloaded from the RWS AppStore via this link. The download is a zip file. So to install this you download the zip, unzip the contents which will give you a FAR Model 1.0.sdltqasettings file, and then you simply import it here:

It's based on these three things:

- Functional equivalence (do the subtitles convey speaker meaning?)

- Acceptability (do the subtitles sound correct and natural in the target language?)

- Readability (can the subtitles be read in a fluent and non-intrusive way?)

For more information on this model please refer to this paper published by Prof. Jan Pedersen: The FAR model: assessing quality in interlingual subtitling.

IMPORTANT NOTE: TQA is a feature available in Studio for the Professional version only. Other versions of Studio can only use this model when working with a project package that has been prepared with the TQA profile configured.

Frame Rate

We use the Frame Rate to calculate the position of the frames in the document, whether or not a video is loaded. For this reason, it's important to check and associate the correct Frame Rate from the settings before you start working on the document, as the incorrect value could eventually result in a reduction of accuracy when calculating the position of the frames in the video.

The Frame Rate is automatically interpreted from the Start and End time-codes of the subtitles in the document when it is loaded in the editor for the first time. Depending on the time-code format of the document, the method in which the Frame Rate is identified can differ, as follows:

Frames

We first recover the highest frame identified from the Start and End times of the subtitles in the document and then select the nearest Frame Rate greater than that value from the Standard Frame Rates.

Milliseconds

We use a regression technique in interpreting the Frame Rate when loading a document whose time-code format is in milliseconds. We create a regression model that contains the values for all milliseconds-per-frame * frame for all of the Standard Frame Rates. The Frame Rate is then identified by evaluating the similarity between the Start and End times of the subtitles in the document against the values in the model.

Understanding this, the Frame Rate identified through this method can be considered reliable only in cases where the milliseconds reflect the actual position of the Frames in the video with a limited tolerance.
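The selection rule described for the Frames time-code format can be sketched as below. This is only an illustration: the function name is hypothetical, and the list of frame rates is an assumption made for the example, since the plugin's actual Standard Frame Rates are not reproduced here. The regression approach used for milliseconds is not covered by this sketch.

```python
from typing import Iterable, Optional, Sequence

# ASSUMPTION: an illustrative set of common frame rates, not the plugin's list.
ASSUMED_STANDARD_FRAME_RATES = (23.976, 24.0, 25.0, 29.97, 30.0, 50.0, 59.94, 60.0)


def guess_frame_rate(
    frame_values: Iterable[int],
    candidates: Sequence[float] = ASSUMED_STANDARD_FRAME_RATES,
) -> Optional[float]:
    """Pick the nearest candidate rate greater than the highest frame seen.

    frame_values are the frame components taken from the Start and End
    time-codes of every subtitle in the document.
    """
    highest = max(frame_values, default=None)
    if highest is None:
        return None  # no subtitles, nothing to infer
    for rate in sorted(candidates):
        if rate > highest:
            return rate
    return None  # highest frame exceeds every candidate rate


if __name__ == "__main__":
    print(guess_frame_rate([3, 12, 24]))
```

A document whose time-codes never reach the upper frames of the true rate would be assigned a lower rate by this rule, which is one reason the value should be checked before starting work.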

Modifying the Frame Rate

It's important that the Frame Rate value chosen coincides with the Frame Rate that was used originally when creating the native file. There is no value in the native subtitle file that helps us identify what Frame Rate was used by the author when creating it, so we attempt to ascertain this from the time-code data of the subtitle document the first time it is loaded in the Studio Editor. This may or may not be accurate, depending on the time-code format (Frames or Milliseconds) and the time-code data recovered.

The user can modify the Frame Rate by either loading a video or manually assigning it from the settings window.

- Load a video

  - Open the Subtitle settings window.
  - Browse and load a video that coincides with the subtitles in the document. Note: The Frame Rate will be inherited from the FPS of the video, and the user will be prevented from modifying it.
  - Click OK.

- Manually editing

  - Open the Subtitle settings window.
  - Enable Advanced Options.
  - Select from the list of Frame Rates available in the combobox or enter a new value that coincides with the subtitles in the document.
  - Click OK.

Warnings

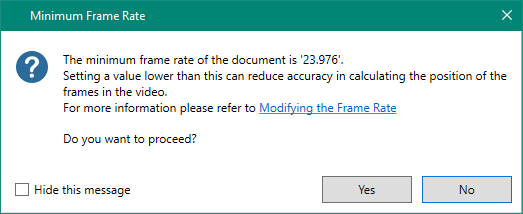

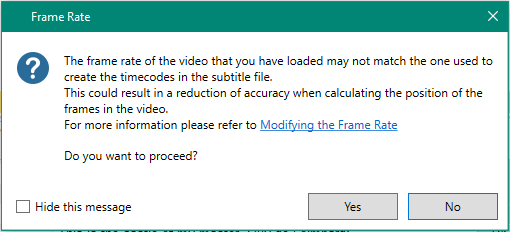

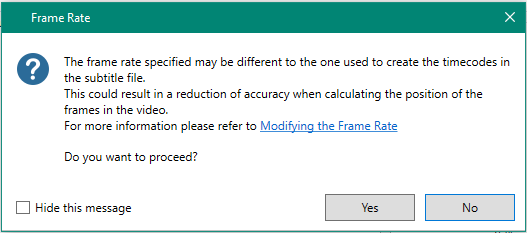

The following warning messages are displayed to the user when the Frame Rate is updated, to make them aware of the possible consequences of assigning an incorrect value. The issues that you can expect to see when modifying the Frame Rate relate to the precision of calculating the position (frames or milliseconds, depending on the time-code format) within the video, which becomes more evident when switching between time-code formats.

These warning messages can be hidden by checking the checkbox 'Hide this message' once they are no longer needed.

- The user attempts to assign a Frame Rate that is lower than the minimum value identified from a document (Frames time-code format only). This will force the plugin to recalculate the frame position for each of the subtitles and potentially reduce accuracy in locating the correct position in the video.

- A video is loaded with a Frame Rate that is different to the current value.

- A Frame Rate is assigned that is different to the current value.

Standard Frame Rates

Keyboard Shortcuts

The Subtitling plugin has a number of keyboard shortcut actions that you can adapt to fit your needs at any time.

Procedure

- Go to File > Options and select Keyboard shortcuts > Studio Subtitling.
- Locate the row containing the action for which you want to create or edit the shortcut.
- Select the cell in the Shortcut column (where the current keyboard shortcut is displayed, if one exists).
- Press the key, or combination of keys, that you want to use for the shortcut. The key combination appears in the Shortcut column.
  Note: Shift+F10 and Ctrl+Shift+F10 are reserved for internal use and cannot be assigned as shortcut keys. Similarly, single keys such as the numbers on your keypad cannot be assigned due to some limitations.
- Click outside the row you just edited. SDL Trados Studio checks whether the new shortcut is already in use. If the shortcut is already in use, the entire row is highlighted in red and you must remove or edit the shortcut.
- Select OK to save the shortcut and close the Options dialog.

Subtitling Preview view

Assign single key keyboard shortcuts

Assigning single key keyboard shortcuts from within Studio is not supported. For instance, it is not possible to assign the number 1 or 2 from the numpad on your keyboard as a hotkey in the Keyboard Shortcut Options.

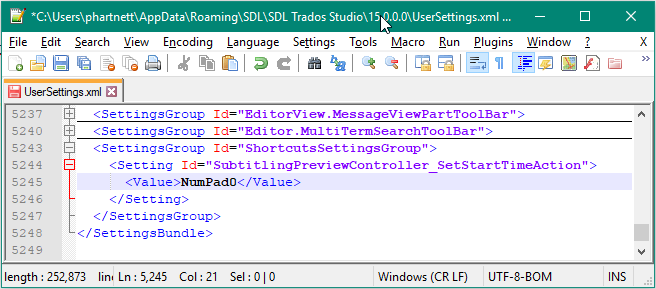

We can, however, work around this limitation and manually assign keyboard shortcuts by updating the settings file directly, as follows:

Procedure

- Close SDL Trados Studio.

- Open the UserSettings.xml file in a text editor; it is located here: C:\Users\<UserId>\AppData\Roaming\SDL\SDL Trados Studio\15.0.0.0\

Note: replace <UserId> with the user id that signed in to the OS.

- Locate the ShortcutsSettingsGroup section. If it is not present in the file, then simply create a new settings group:

    <SettingsGroup Id="ShortcutsSettingsGroup"></SettingsGroup>

- Add the setting manually by associating the appropriate keyboard shortcut Id. Refer to the Settings ID associated with each of the keyboard shortcuts in Keyboard Shortcuts when identifying the appropriate action:

    <Setting Id="SubtitlingPreviewController_SetStartTimeAction">
      <Value>NumPad0</Value>
    </Setting>

Example:
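For readers who prefer to script the manual edit described in this procedure, a minimal Python sketch is shown below. It is an illustration only and not part of the plugin: the helper name is hypothetical, the root element name used in the demo is a placeholder, and the sketch assumes the settings file does not use XML namespaces and that a missing ShortcutsSettingsGroup can be created directly under the root element. Back up UserSettings.xml and close Studio before attempting anything like this on a real file.

```python
import xml.etree.ElementTree as ET

GROUP_ID = "ShortcutsSettingsGroup"


def set_shortcut(root: ET.Element, action_id: str, key: str) -> ET.Element:
    """Add or update a <Setting> for action_id inside the shortcuts group."""
    group = None
    for candidate in root.iter("SettingsGroup"):
        if candidate.get("Id") == GROUP_ID:
            group = candidate
            break
    if group is None:
        # Assumption: the group may be created directly under the root.
        group = ET.SubElement(root, "SettingsGroup", {"Id": GROUP_ID})

    setting = None
    for candidate in group.findall("Setting"):
        if candidate.get("Id") == action_id:
            setting = candidate
            break
    if setting is None:
        setting = ET.SubElement(group, "Setting", {"Id": action_id})

    value = setting.find("Value")
    if value is None:
        value = ET.SubElement(setting, "Value")
    value.text = key
    return setting


if __name__ == "__main__":
    # In-memory demo; "Settings" is a placeholder root, not the real file layout.
    demo_root = ET.fromstring("<Settings></Settings>")
    set_shortcut(demo_root, "SubtitlingPreviewController_SetStartTimeAction", "NumPad0")
    print(ET.tostring(demo_root, encoding="unicode"))
```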

Merge across paragraphs support

Merge across paragraphs is now fully supported with the latest release of the File Types (WebVTT, SBV & STL) version 1.0.3+ and Subtitling plugin version 1.0.8+. All context and structure related to the paragraphs that have been fully merged to the parent paragraph are excluded from the native file that is regenerated with the target content.

The feature to merge across paragraphs in Studio is, however, limited: there is no action to un-merge paragraphs after they have been merged, other than performing an undo operation in the editor. If you perform an undo operation to un-merge merged paragraphs, you will then need to click on the Reload button in the Subtitling Preview control to refresh the data from both the Studio editor and Subtitling data-grids.

Merge across paragraph settings

There are two options that permit the user to influence the behavior of merging across paragraph boundaries that are accessible from platform and project settings.

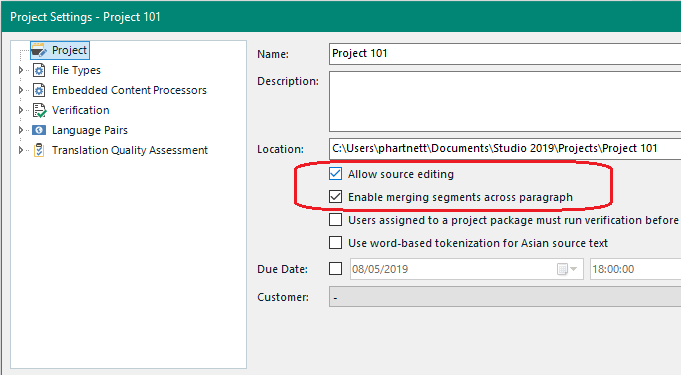

Project Settings

Location: Project Settings>Project

- Allow source editing {checkbox}

- Enable merging segments across paragraph {checkbox}

Platform level settings

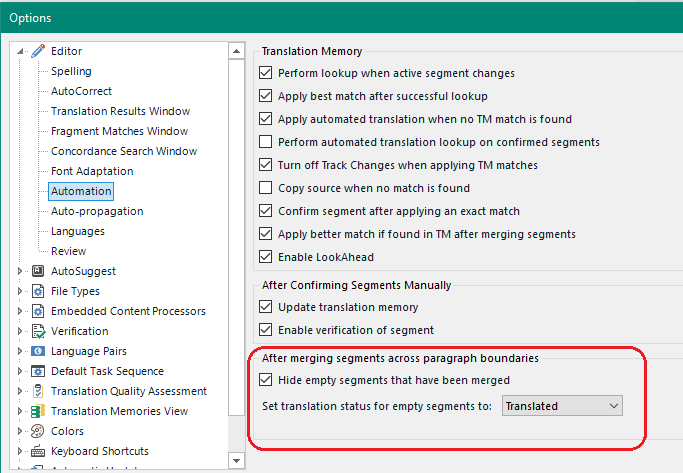

Location: File>Options>Editor>Automation

- Hide empty segments that have been merged {checkbox}

- Note: If this option is not checked, the empty segments will be visible in the Studio editor, however they will remain hidden in the subtitling preview grid as long as the segment pair is identified as merged.

- Set translation status for empty segments to {combobox: translation status}

Minimum time span between subtitles

Some agencies require a gap of 2 to 4 frames between titles. The gap triggers the viewer to look down and read the next subtitle. Without gaps between titles, it is sometimes difficult to identify when the title is changing, especially when they are formatted similarly. The BBC demands a 3-frame gap in their specification; however, anything between 2 and 4 frames is acceptable.

Procedure

- Subtitling Data view > Toolbar > Set minimum time span between subtitles

- Select/set the appropriate time-code format and value.

- Click OK.
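To illustrate what a minimum time span means in practice, the sketch below enforces a gap of a given number of frames between consecutive subtitles. It is not the plugin's implementation. How the plugin adjusts the time-codes is not described here, so the sketch assumes the End time of the earlier subtitle is trimmed, and the function name is hypothetical.

```python
from typing import List, Tuple

Subtitle = Tuple[float, float]  # (start_ms, end_ms)


def enforce_minimum_gap(
    subtitles: List[Subtitle], gap_frames: int, frame_rate: float
) -> List[Subtitle]:
    """Return a copy in which each subtitle ends at least gap_frames before the next.

    Assumes the subtitles are sorted by start time.
    """
    gap_ms = gap_frames * 1000.0 / frame_rate
    adjusted = []
    for index, (start, end) in enumerate(subtitles):
        if index + 1 < len(subtitles):
            latest_end = subtitles[index + 1][0] - gap_ms
            if end > latest_end:
                # Assumption: trim the out-time, but never before the in-time.
                end = max(start, latest_end)
        adjusted.append((start, end))
    return adjusted


if __name__ == "__main__":
    # A 3-frame gap at 25 fps is 120 ms.
    print(enforce_minimum_gap([(0, 1000), (1000, 2000)], gap_frames=3, frame_rate=25))
```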

Time-code Format

The Time-code format can be read as Frames or Milliseconds. What this means is, the fractional digits in the time-code (e.g. the last digits after the period) can represent frames of the video or milliseconds in time.

Undo/Redo Support

Currently, there is no support to automatically refresh the data in the Subtitling views when the Undo and Redo actions are executed from the Studio Editor. As a workaround, the user can click on the Refresh button from the Subtitling views to refresh the UI content in the controls.