Hi everyone,

I am using Trados Studio 2024 with the integrated AI Assistant to improve translations and text quality via the OpenAI API. I have set up multiple Assistants on the OpenAI platform, each tailored to my specific needs.

My Goal:

I want Trados Studio to call a specific OpenAI Assistant, rather than always using the default Assistant.

My Current Configuration:

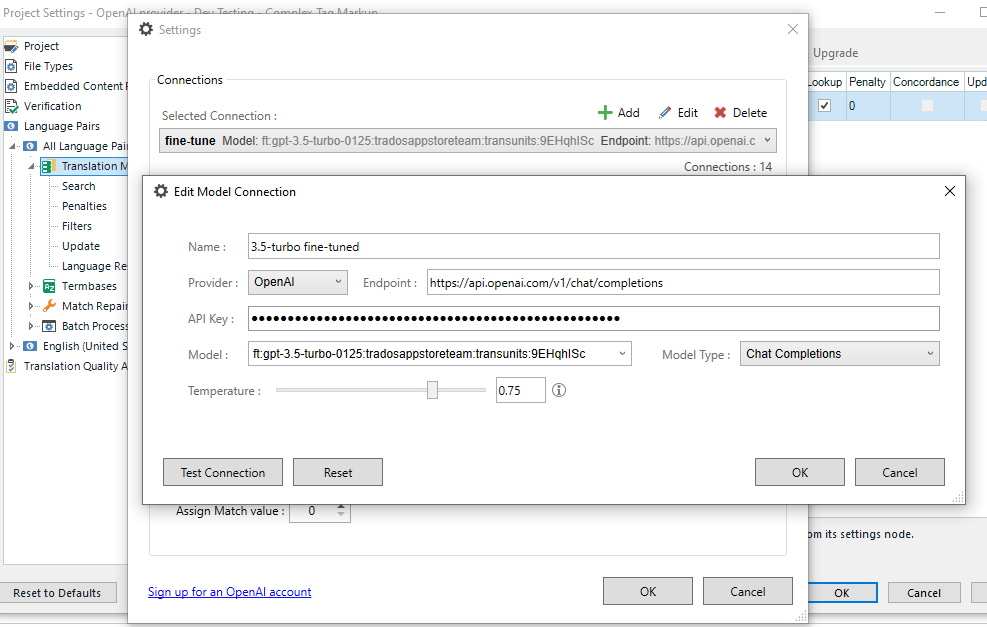

- Provider: OpenAI

- Endpoint: api.openai.com/.../completions

- Model: gpt-4-turbo

- API Key: My personal OpenAI API key

- Completion Type: Chat Completion

However, the Trados Studio AI Assistant settings have no option to specify an `assistant_id`. As a result, every request goes to the configured model (what I call OpenAI's standard Assistant) instead of to my custom Assistant.

What I Have Tried So Far:

- Changed the API endpoint to api.openai.com/.../threads → Trados throws a connection error.

- Created a dedicated API key for my desired Assistant → Trados still defaults to the standard Assistant.

- Tried a system prompt workaround → this didn't work either.
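For context on why the `/threads` endpoint swap fails: as far as I understand OpenAI's API reference, the Assistants API (v2) does not take a Chat Completions body at all. The Assistant is selected by an `assistant_id` in the request body, and the reply only arrives after a multi-step flow (start a run, poll it, then list the thread's messages). The sketch below only describes those requests and sends nothing; the helper name `assistant_call_plan` and the ID `asst_abc123` are hypothetical placeholders.

```python
from __future__ import annotations

import json

API_BASE = "https://api.openai.com/v1"

# Assistants endpoints also require this beta header next to the usual Authorization header.
ASSISTANTS_HEADERS = {"OpenAI-Beta": "assistants=v2", "Content-Type": "application/json"}


def assistant_call_plan(assistant_id: str, text: str) -> list[tuple[str, str, dict | None]]:
    """Return the (method, url, json_body) steps needed to get one reply from an Assistant.

    Hypothetical helper: it only describes the requests, it does not send them.
    The {thread_id} and {run_id} placeholders come from the first response.
    """
    return [
        # 1. Create a thread holding the user's text and start a run on the chosen Assistant.
        ("POST", f"{API_BASE}/threads/runs", {
            "assistant_id": assistant_id,
            "thread": {"messages": [{"role": "user", "content": text}]},
        }),
        # 2. Poll the run until its status is "completed".
        ("GET", f"{API_BASE}/threads/{{thread_id}}/runs/{{run_id}}", None),
        # 3. Read the Assistant's reply from the thread's message list.
        ("GET", f"{API_BASE}/threads/{{thread_id}}/messages", None),
    ]


if __name__ == "__main__":
    for method, url, body in assistant_call_plan("asst_abc123", "Please review this translation."):
        print(method, url, json.dumps(body) if body else "")
```

A client that sends a single Chat Completions request and expects a single answer back has no step where it could supply `assistant_id` or poll a run, which would explain the connection error.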

My Question:

Is there a way to select a specific OpenAI Assistant in Trados Studio 2024, instead of always using the default one?

If not directly, is there a workaround, or could this be achieved through a script or plugin?
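One conceivable workaround, which I have not tested: a small local proxy that Trados points at instead of api.openai.com. It would accept the Chat Completions request, run it on the chosen Assistant, and wrap the reply back into a Chat Completions-shaped response. Below is only a sketch of the translation layer of such a proxy. The function names, the `ASSISTANT_ID` placeholder, and the choice to drop system messages (so the Assistant's own stored instructions apply) are my assumptions, and I haven't verified that Trados accepts a localhost endpoint URL.

```python
from __future__ import annotations

import time

ASSISTANT_ID = "asst_abc123"  # hypothetical placeholder: the ID of the Assistant to use


def chat_to_thread_and_run(chat_body: dict, assistant_id: str = ASSISTANT_ID) -> dict:
    """Convert a Chat Completions request body into a create-thread-and-run body."""
    # Thread messages accept only "user" and "assistant" roles, so system messages
    # are dropped here and the Assistant's stored instructions take their place.
    messages = [
        {"role": m["role"], "content": m["content"]}
        for m in chat_body.get("messages", [])
        if m.get("role") in ("user", "assistant")
    ]
    return {"assistant_id": assistant_id, "thread": {"messages": messages}}


def messages_to_chat_response(messages_list: dict, model: str) -> dict:
    """Wrap the newest assistant message of a thread as a chat.completion response."""
    # The thread message list is returned newest first by default (order=desc).
    reply = next((m for m in messages_list["data"] if m["role"] == "assistant"), None)
    if reply is None:
        raise ValueError("thread contains no assistant message yet")
    # Concatenate the text parts of the reply; other part types are ignored.
    text = "".join(
        part["text"]["value"] for part in reply["content"] if part["type"] == "text"
    )
    return {
        "id": reply["id"],
        "object": "chat.completion",
        "created": int(time.time()),
        "model": model,
        "choices": [{
            "index": 0,
            "message": {"role": "assistant", "content": text},
            "finish_reason": "stop",
        }],
    }
```

Between these two functions, the proxy would still have to POST the first body to /v1/threads/runs with the `OpenAI-Beta: assistants=v2` header, poll the run until it completes, and fetch GET /v1/threads/{thread_id}/messages.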

Looking forward to your insights and solutions!

Thanks in advance!
