I'm using MT Enhanced to connect to our AutoML models, and I am finding that its handling of internal tags is really bad. At first glance, it looks like the plugin breaks the sentence up into chunks at the tags, sends those chunks to the MT engine, and then concatenates the engine's responses to form the output. If that is true, it cannot produce quality results, because each chunk is translated without the context of the rest of the sentence.
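To make the suspicion concrete, here is a minimal Python sketch of the two strategies. It is only an illustration of what I think may be happening, not the plugin's actual code: the function names `chunked_payloads` and `whole_payload` are made up for this example, and no MT engine is called.

```python
import re

# Matches any inline tag such as <strong> or </strong>.
# The capturing group makes re.split keep the tags in its result.
TAG = re.compile(r"(<[^>]+>)")


def chunked_payloads(segment: str) -> list[str]:
    """Text pieces the engine would see if the segment were cut at every tag."""
    parts = TAG.split(segment)
    # Drop the tags themselves and any empty or whitespace-only pieces.
    return [p for p in parts if p.strip() and not TAG.fullmatch(p)]


def whole_payload(segment: str) -> list[str]:
    """Single payload the engine would see if tags were sent along with the text."""
    return [segment]


segment = "Please <strong>log in</strong> so that we can verify your permissions."

print(chunked_payloads(segment))
print(whole_payload(segment))
```

With the chunked approach, the engine would have to translate "Please ", "log in" and " so that we can verify your permissions." as three unrelated requests, which would explain output that does not read as one sentence.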

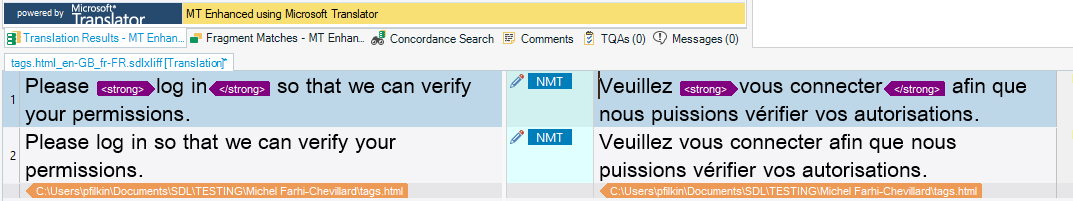

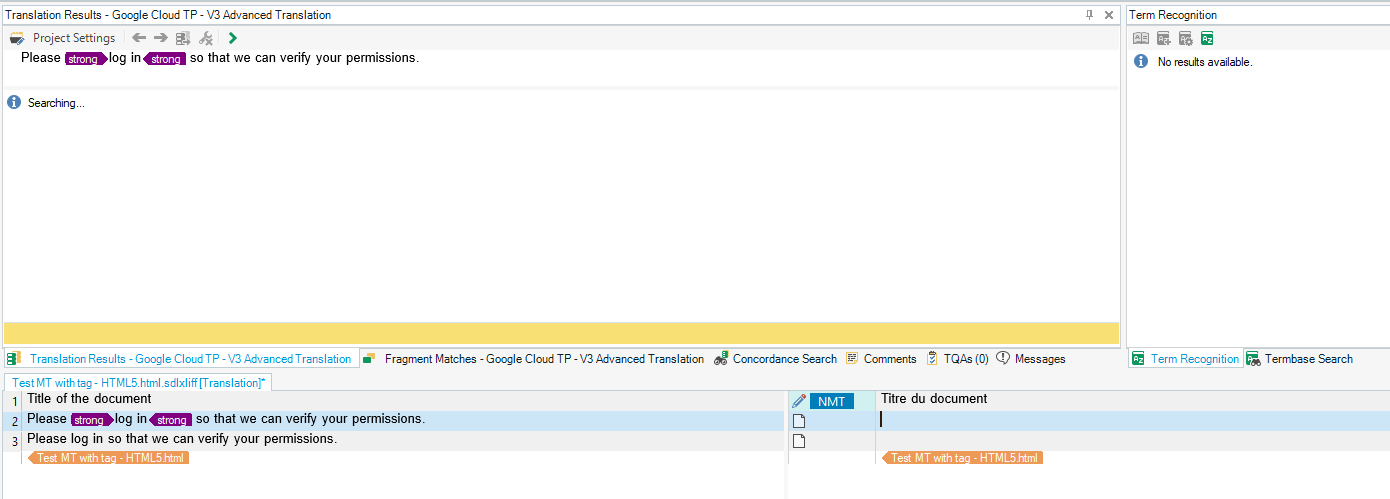

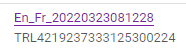

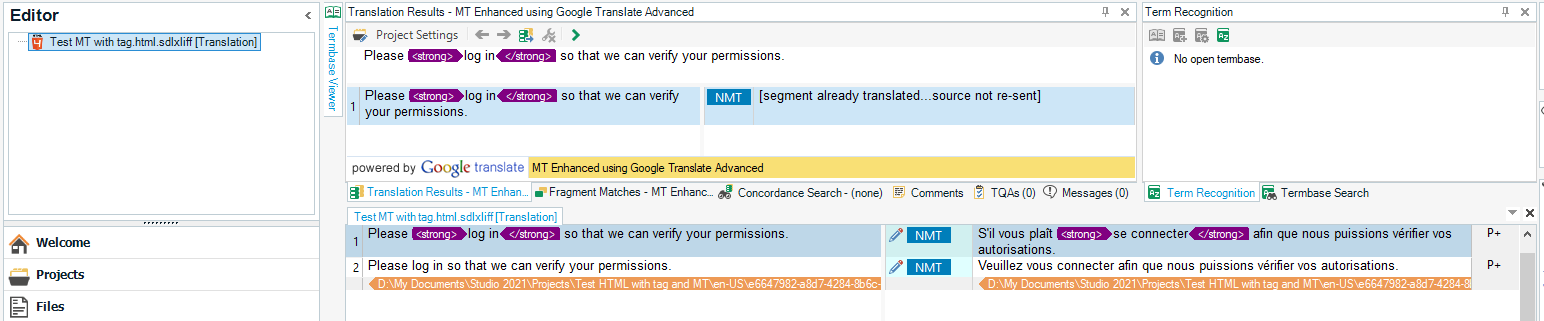

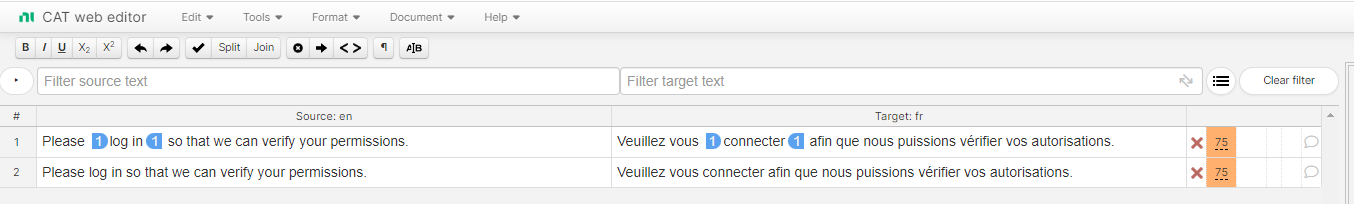

Digging further, I sent the exact same file through MT in two setups: Trados 2021 + MT Enhanced + my Google AutoML engine, and Phrase TMS + Phrase Translate + my Google AutoML engine. To my surprise, the results are completely different, and clearly in favor of Phrase.

vs

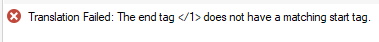

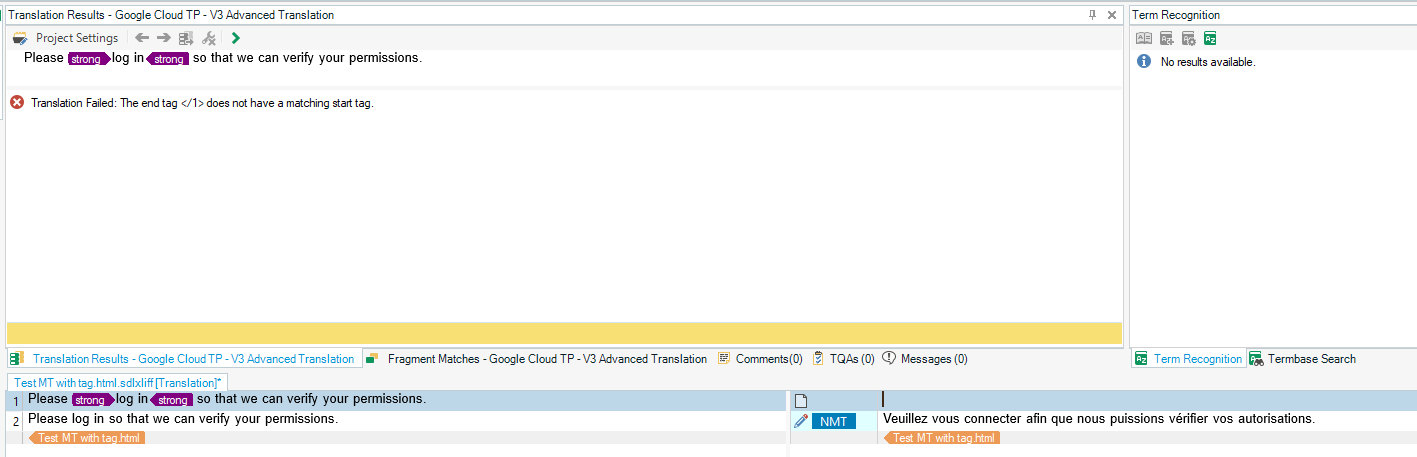

As you can see, the Phrase connector produces the same translation whether or not "log in" is surrounded by a strong tag. The Trados connector does not, and it comes up with a nearly nonsensical output in French.

Hence my question: why is Phrase able to handle the internal tags properly when connecting to the same AutoML engine, and why can't the MT Enhanced plugin match those results?

By the way, when using a Google generic or MS engine in MT Enhanced, the results are no better. Only when using the DeepL connector do I get good results for this sentence. But of course, I do not want to use a generic DeepL engine, I want to use a trained AutoML engine for my needs.

Here is my HTML file, in case you would like to reproduce:

<html> <p>Please <strong>log in</strong> so that we can verify your permissions.</p> <p>Please log in so that we can verify your permissions.</p> </html>
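For anyone who wants to rule out the engine itself, a rough sketch of the request I would expect a tag-aware connector to send is below. It only builds the request as a plain dictionary using the field names of the Cloud Translation v3 `translate_text` request. The project ID, location, and model ID are placeholders, the `en` to `fr` language pair is my assumption, and `build_request` is a hypothetical helper, not something taken from either connector.

```python
def build_request(project_id: str, location: str, model_id: str,
                  contents: list[str]) -> dict:
    """Build a Cloud Translation v3 style request that keeps inline tags intact."""
    parent = f"projects/{project_id}/locations/{location}"
    return {
        "parent": parent,
        "contents": contents,
        # text/html asks the engine to treat tags as markup, not as text.
        "mime_type": "text/html",
        "source_language_code": "en",  # assumption: English source
        "target_language_code": "fr",  # French target, as in the screenshots
        "model": f"{parent}/models/{model_id}",
    }


segments = [
    "Please <strong>log in</strong> so that we can verify your permissions.",
    "Please log in so that we can verify your permissions.",
]

# Placeholder identifiers; replace with real values before sending.
request = build_request("my-project", "us-central1", "my-model-id", segments)
print(request)
```

If the engine returns consistent French for both segments when called this way, that would suggest the difference comes from how the plugin prepares the segment rather than from the model.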

Thank you.


[edited by: RWS Community AI at 4:36 PM (GMT 0) on 14 Nov 2024]
