I switched from "Open AI translator" to "AI Professional". Since then, I have run into several situations where the tags of the source text are not respected by AI Professional (whereas both NMT engines I use, DeepL and Language Weaver, work as expected).

My current version of AIP is 1.0.1.2 (from February 1st), but the effect was there in the previous version as well.

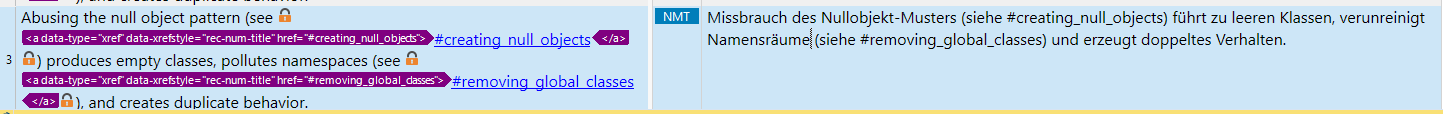

Here is example 1:

And example 2:

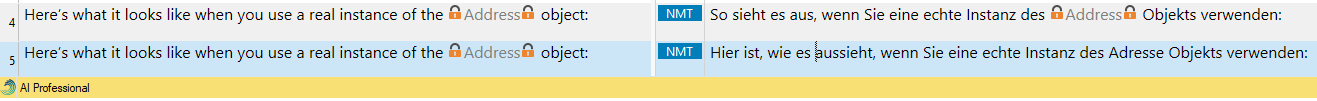

I checked the appropriate option in the AIP config (gpt-3.5-turbo shows the same problem, btw, as expected):

[image: screenshot of the AIP configuration]

[edited by: RWS Community AI at 4:49 PM (GMT 0) on 14 Nov 2024]
