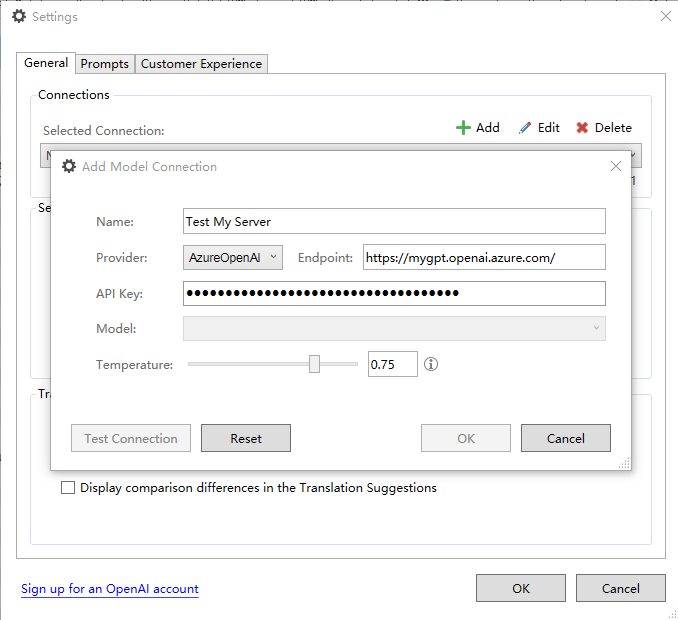

Has anyone tested the AI Professional (appstore.rws.com/.../239) plugin with their own Azure OpenAI endpoint to provide GPT-4 suggestions?

I tried to configure my Azure OpenAI instance but couldn't get it working: the plugin can't read the model list when I enter my Azure OpenAI endpoint.
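One way to check whether the endpoint itself answers, independent of the plugin, is to build the model-list request by hand. Below is a minimal Python sketch. It assumes the `/openai/models` data-plane route and the `2024-02-01` API version, so check both against the current Azure OpenAI REST reference. The resource name `my-resource` and the helper `build_models_request` are hypothetical.

```python
import urllib.parse
import urllib.request

# Assumption: "2024-02-01" is used as an example API version; substitute a
# version your Azure OpenAI resource actually supports.
DEFAULT_API_VERSION = "2024-02-01"


def build_models_request(endpoint: str, api_key: str,
                         api_version: str = DEFAULT_API_VERSION) -> urllib.request.Request:
    """Build (but do not send) a GET request for the Azure OpenAI model list.

    `endpoint` is the resource URL shown in the Azure portal, for example
    https://my-resource.openai.azure.com/ (hypothetical resource name).
    Hypothetical helper, not part of the plugin or any SDK.
    """
    # Drop a trailing slash so the path is not doubled.
    base = endpoint.rstrip("/")
    query = urllib.parse.urlencode({"api-version": api_version})
    url = f"{base}/openai/models?{query}"
    # Azure OpenAI key auth uses an "api-key" header, not the
    # "Authorization: Bearer ..." header used by api.openai.com.
    return urllib.request.Request(url, headers={"api-key": api_key}, method="GET")
```

Sending it with `urllib.request.urlopen(req)` and getting a 401 or 404 back would point to a key or URL problem rather than a plugin problem.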


[edited by: Trados AI at 7:34 AM (GMT 0) on 28 Mar 2024]
