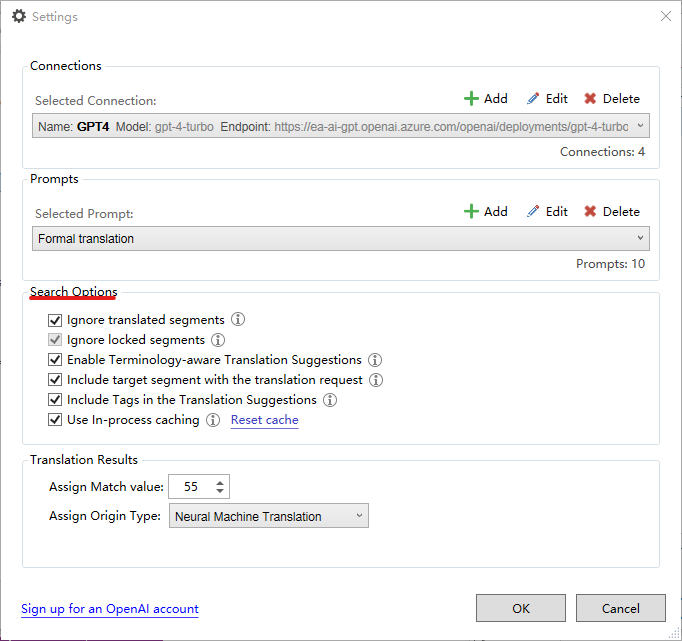

Can anyone help explain each item in the search options? The plugin documentation doesn't say anything about them.

Thanks in advance!

[edited by: RWS Community AI at 4:37 PM (GMT 0) on 14 Nov 2024]

Hi Henry Huang,

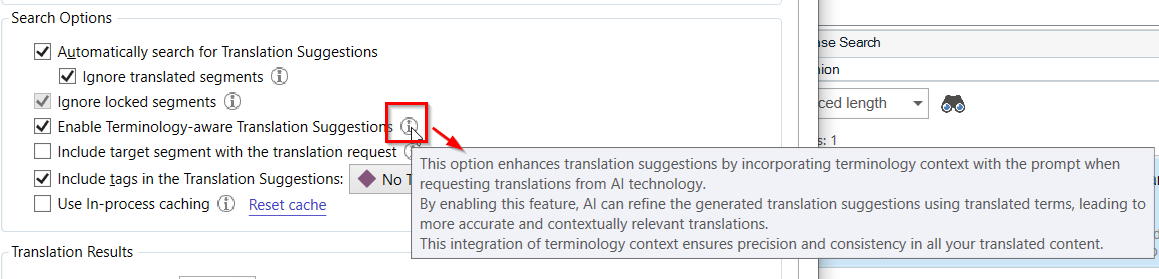

The search options control what is sent to the AI along with the prompt when it generates translation suggestions. Each option has a tooltip, shown when you hover over the “i” icon, that describes it in detail.

The tooltips explain what each option does. If you need further clarification, please ask.

Regards,

Oana

Oana Nagy | RWS Group

Dear Oana,

Yes, I saw those tooltips, but I need to know how the options actually work.

For example, Enable Terminology: I have 5 termbases. Does it work only with the first one, or with all 5?

I'm trying to write instructions on how to set the "Search Options" in AI Professional, but the 3 items in red are not explained clearly. Can anyone help explain how those 3 items work?

Search Options

§ Automatically search for Translation Suggestions – If this option is checked, clicking a target field in the Trados Editor sends the source sentence to OpenAI for translation; you don't need to click the “binoculars” icon to start the translation manually.

• Ignore translated segments – If the target field already contains a translation, the source content is not sent to OpenAI.

§ Ignore locked segments – If the segment is locked, the source content is not sent to OpenAI.

§ Enable Terminology-aware Translation Suggestion – not sure how this works yet.

§ Include target segment with the translation request – not sure how this works yet.

§ Include tags in the Translation Suggestion:

• Tag Id – display tags with an ID number

• Hide Tag – do not display or handle any tags

• No Tag text – only display tag marks

• Partial Tag Text – only display keywords of the tag

• Full Tag Text – display the entire tag in text mode

§ Use In-process caching – I don't know what kind of information is cached or how large the cache is.

• Reset cache – clear previously cached content

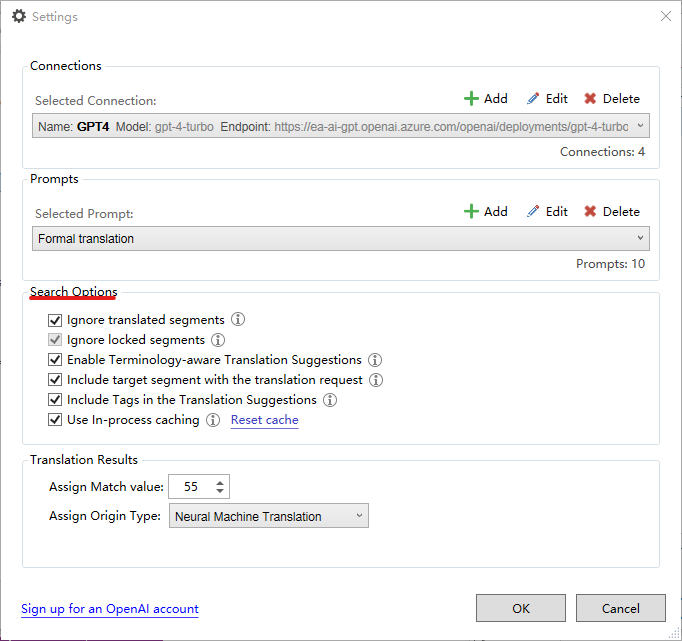

Let me briefly answer your "3 items in red":

1. Enable Terminology-aware Translation Suggestion – not sure how it works yet?

With this option selected, in both batch pre-translation and segment-by-segment translation, terms in the translation generated by the LLM are automatically replaced with the translations of the matching terms in your termbases. This applies whether you have one termbase or several.
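To make the "one or several termbases" point concrete, here is a minimal Python sketch of how term lookup across multiple termbases could work. It is only an illustration, not AI Professional's actual code: the function name `find_terms`, the dictionary-based termbases, and the "first termbase wins" rule for duplicates are all my assumptions.

```python
def find_terms(source: str, termbases: list[dict[str, str]]) -> dict[str, str]:
    """Collect every termbase entry whose source term occurs in the segment.

    Hypothetical helper: each termbase is modelled as a plain
    {source_term: target_term} dictionary.
    """
    matches: dict[str, str] = {}
    lowered = source.lower()
    for termbase in termbases:  # every termbase is consulted, not just the first
        for src_term, tgt_term in termbase.items():
            # Assumption: if two termbases define the same term, the first one wins.
            if src_term.lower() in lowered and src_term not in matches:
                matches[src_term] = tgt_term
    return matches


termbases = [
    {"segment": "Segment"},
    {"termbase": "Termdatenbank"},
]
terms = find_terms("Open the termbase and lock the segment", termbases)
print(terms)
```

In this sketch the matched term pairs come from both dictionaries, which mirrors the behaviour described above: all termbases contribute, not only the first.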

2. Include target segment with the translation request - not sure how it works yet?

For TM fuzzy matches or NMT output, the matched translation is included in the request to the LLM as context, which helps the LLM produce higher-quality and more stylistically consistent translations. Typically, the best balance of cost and quality comes from sending only segments whose fuzzy match falls within a configured range (for example, 60%-90%), but AI Professional currently does not support setting a range for match values. In short, enabling this option helps improve the quality of the translations generated by the LLM, but it consumes more tokens.
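As a rough illustration of the idea, the Python sketch below builds a request with and without the existing target text, and shows what a match-range check would look like. Everything here is hypothetical: `build_request`, `in_fuzzy_range`, and the prompt wording are my own names and are not taken from the plugin, which (as noted) has no range setting today.

```python
from typing import Optional


def build_request(source: str, existing_target: Optional[str] = None,
                  include_target: bool = True) -> str:
    """Assemble the text sent to the LLM (hypothetical prompt wording)."""
    lines = ["Translate the following segment.", f"Source: {source}"]
    if include_target and existing_target:
        # The fuzzy-match or NMT translation is added as context for the LLM.
        lines.append(f"Existing translation (for reference): {existing_target}")
    return "\n".join(lines)


def in_fuzzy_range(match_percent: int, low: int = 60, high: int = 90) -> bool:
    """Range check that a configurable match-value filter would apply."""
    return low <= match_percent <= high


with_target = build_request("Press the button.", "Drücken Sie die Taste.")
without_target = build_request("Press the button.", "Drücken Sie die Taste.",
                               include_target=False)
# The request with the target is longer, so it costs more tokens.
print(len(with_target) > len(without_target))
```

The longer request is where the extra token cost comes from: the existing translation travels with every request when the option is enabled.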

3. Use In-process caching – Don’t know what kind of information are cached, and the size of cache are?

A translation request to the LLM usually consists of the prompt and the source text. To save tokens, and therefore cost, the generated translations are stored in a cache and reused instead of sending the same content again. The cache is cleared when Trados Studio restarts, and you can also reset it manually at any time.
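If it helps to picture it, an in-process cache of this kind can be sketched in a few lines of Python. This is an assumption about the general technique, not the plugin's real implementation: the class name `TranslationCache`, the (prompt, source) cache key, and the `fake_llm` stand-in are all hypothetical.

```python
from typing import Callable


class TranslationCache:
    """In-memory cache: lives only as long as the process (here, the object)."""

    def __init__(self) -> None:
        self._store: dict[tuple[str, str], str] = {}

    def get_or_translate(self, prompt: str, source: str,
                         translate: Callable[[str, str], str]) -> str:
        key = (prompt, source)  # assumption: same prompt + same source = same entry
        if key not in self._store:
            # Only a cache miss triggers a (paid) request to the LLM.
            self._store[key] = translate(prompt, source)
        return self._store[key]

    def reset(self) -> None:
        """Equivalent of a 'Reset cache' action: drop everything stored so far."""
        self._store.clear()


requests_sent = []


def fake_llm(prompt: str, source: str) -> str:
    # Stand-in for a real LLM call; records each request so we can count them.
    requests_sent.append(source)
    return source.upper()


cache = TranslationCache()
cache.get_or_translate("Translate formally", "hello", fake_llm)
cache.get_or_translate("Translate formally", "hello", fake_llm)  # served from cache
print(len(requests_sent))  # 1
```

Because the data is held in memory only, nothing survives a restart, which matches the behaviour described above.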

Oana Nagy's reply, especially the screenshot, should have clarified the issue. You might not have understood it immediately; I hope my reply can be helpful.

Note: AI Professional can be used for batch pre-translation as well as for generating translations segment by segment. The prompts come into play in segment-by-segment translation: select a prompt and click the magnifying glass (search) icon to generate a new translation based on it.

This demo video is also very helpful:

ELEVATE 2024 - Bonus sessions - My favorite AI Professional app prompts - Martín Chamorro - www.trados.com/.../

Thanks, Joe, for your kind explanation. That's really helpful!