Dear all,

We have deployed an Azure OpenAI model in our Microsoft Azure infrastructure; according to our IT team, it should be up and running.

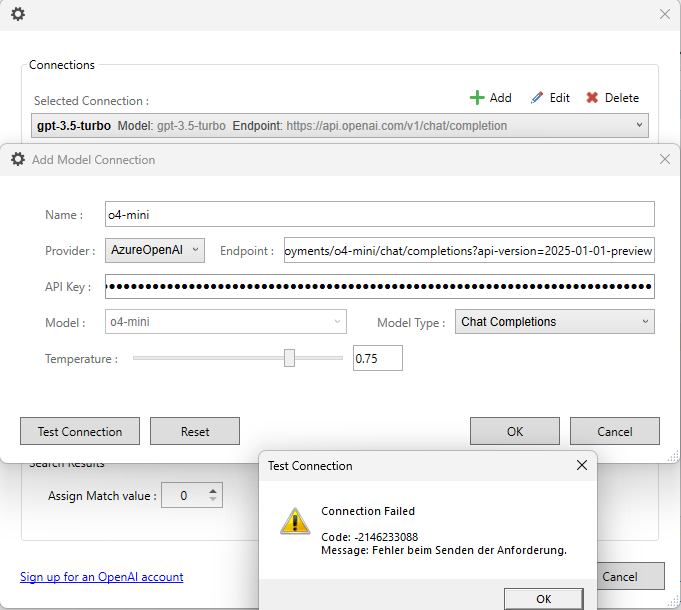

When I set up the connection details in the Trados Studio plugin, I get the following error message (see screenshot below).

It does not seem to be one of the documented API errors (https://platform.openai.com/docs/guides/error-codes/api-errors).

The Studio log file does not provide any further details:

OpenAI.Provider.API.AIProvider: 2025-08-20 17:16:49.4640 Info Method: "TestConfiguration", Message: "Fehler beim Senden der Anforderung."

OpenAI.Provider.API.AIProvider: 2025-08-20 17:17:01.3201 Info Method: "TestConfiguration", Message: "Fehler beim Senden der Anforderung."

("Fehler beim Senden der Anforderung." is German for "An error occurred while sending the request.")
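The log message reads like a failure to send the request at all rather than an error response from the service. One way to narrow this down outside Studio is to resolve the host and send a single chat-completions request to the deployment directly from the same machine. Below is a minimal Python sketch of that idea (it is not part of the plugin). The endpoint, deployment name, key and the API version `2024-02-01` are placeholders/assumptions to be replaced with the values shown in the Azure portal.

```python
import json
import socket
import urllib.error
import urllib.parse
import urllib.request
from typing import Optional

# Placeholders (assumptions): replace with the values from the Azure portal.
ENDPOINT = "https://YOUR-RESOURCE.openai.azure.com"
DEPLOYMENT = "YOUR-DEPLOYMENT"
API_VERSION = "2024-02-01"
API_KEY = "YOUR-KEY"


def build_chat_url(endpoint: str, deployment: str, api_version: str) -> str:
    """Azure OpenAI chat-completions URL for one deployment."""
    base = endpoint.rstrip("/")
    query = urllib.parse.urlencode({"api-version": api_version})
    return f"{base}/openai/deployments/{urllib.parse.quote(deployment)}/chat/completions?{query}"


def check_dns(url: str) -> Optional[str]:
    """Return None if the host name resolves, otherwise a short error text."""
    host = urllib.parse.urlparse(url).hostname
    try:
        socket.getaddrinfo(host, 443)
        return None
    except OSError as exc:  # socket.gaierror is a subclass of OSError
        return f"DNS lookup failed for {host}: {exc}"


def probe(url: str, api_key: str, timeout: float = 15.0) -> str:
    """Send one minimal chat request and report where it fails."""
    body = json.dumps({"messages": [{"role": "user", "content": "ping"}]}).encode("utf-8")
    request = urllib.request.Request(
        url,
        data=body,
        method="POST",
        headers={"Content-Type": "application/json", "api-key": api_key},
    )
    try:
        with urllib.request.urlopen(request, timeout=timeout) as response:
            return f"HTTP {response.status}: the service answered"
    except urllib.error.HTTPError as exc:
        # Any HTTP status (401, 404, 429, ...) means the request reached the service.
        return f"HTTP {exc.code}: API-level error from the service"
    except urllib.error.URLError as exc:
        # No HTTP response at all: DNS, proxy, firewall or TLS problem.
        return f"no HTTP response (network/TLS level): {exc.reason}"
    except OSError as exc:
        # For example a timeout while reading the response.
        return f"no HTTP response (network/TLS level): {exc}"


if __name__ == "__main__":
    if API_KEY == "YOUR-KEY":
        print("Fill in ENDPOINT, DEPLOYMENT and API_KEY first.")
    else:
        url = build_chat_url(ENDPOINT, DEPLOYMENT, API_VERSION)
        print(check_dns(url) or probe(url, API_KEY))
```

If this returns an HTTP status such as 401 or 404, the network path is fine and the key or deployment name would be the next suspect. If it reports "no HTTP response", that would point at something between the workstation and Azure (proxy, firewall, TLS inspection), which would be consistent with the wording in the Studio log.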

I'm using Trados Studio 2024 SR1.

Any suggestions?

Regards,

Bruno

[edited by: RWS Community AI at 4:18 PM (GMT 1) on 20 Aug 2025]
