- Kubernetes cluster with total node CPU/RAM meeting the minimum requirement, e.g. AKS, EKS, GKE.

- Autoscaling is enabled in the Kubernetes cluster (optional).

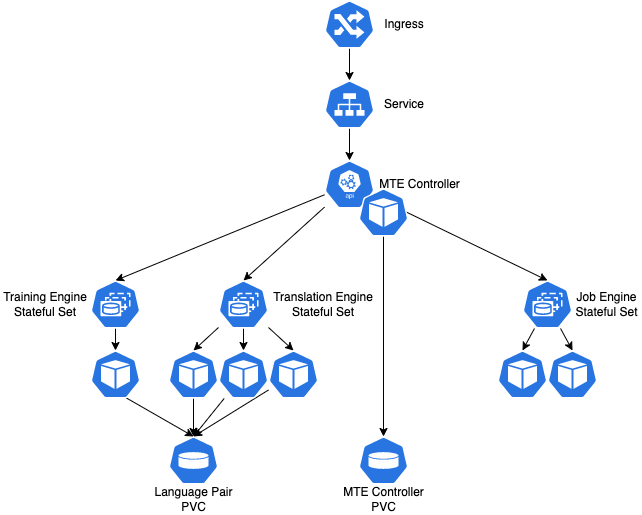

- Ingress controller to access Kubernetes services, e.g. NGINX.

- Storage classes in Kubernetes that support both RWO & RWX volume types.

- Edge Controller host is deployed as a pod in Kubernetes and Edge UI & API are published as a service.

- Job Engines, Translation Engines & Training Engines are deployed as StatefulSets and can be configured either to autoscale based on pod CPU usage or to run a predefined number of replica pods.

- Language Pairs and the Edge configuration are saved in persistent volumes.

- All pods use the same Docker base image.

- Helm charts & example “values.yaml” files are provided by RWS for easy deployment.

- Upgrading & rescaling the Edge configuration is possible with Helm upgrades.

- Training Engines require GPU nodes in the Kubernetes cluster for better performance.

- LW Edge license & default admin accounts are saved as Kubernetes secrets.

- The persistent volume of the Controller pod can use a standard RWO storage class.

- The persistent volume for Language Pairs requires a high-performance, case-sensitive RWX storage class.

- Language Pairs are deployed to the persistent volume using Kubernetes Jobs.
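The two storage requirements above can be sketched as PersistentVolumeClaim manifests, written here as plain Python dicts. This is an illustration only: the claim names, storage class names, and sizes are hypothetical, and the Helm charts provided by RWS may define these volumes differently. Only the manifest fields (`accessModes`, `storageClassName`, `resources.requests.storage`) are standard Kubernetes.

```python
import json


def make_pvc(name, storage_class, access_mode, size):
    """Return a PersistentVolumeClaim manifest as a plain dict."""
    return {
        "apiVersion": "v1",
        "kind": "PersistentVolumeClaim",
        "metadata": {"name": name},
        "spec": {
            "storageClassName": storage_class,
            "accessModes": [access_mode],
            "resources": {"requests": {"storage": size}},
        },
    }


# Hypothetical names and sizes; substitute the values your cluster provides.
# Controller volume: mounted by a single pod, so ReadWriteOnce (RWO) suffices.
controller_pvc = make_pvc(
    "example-controller-data", "example-standard-rwo", "ReadWriteOnce", "20Gi"
)

# Language Pair volume: shared by many engine pods, so ReadWriteMany (RWX).
language_pairs_pvc = make_pvc(
    "example-language-pairs", "example-fast-rwx", "ReadWriteMany", "200Gi"
)

if __name__ == "__main__":
    for manifest in (controller_pvc, language_pairs_pvc):
        print(json.dumps(manifest, indent=2))
```

The printed JSON is accepted by `kubectl apply -f` in the same way as YAML, which makes it easy to confirm that a cluster's storage classes can actually bind both claim types.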

- Bootstrap the Language Weaver Edge admin user.

- Install new Language Pairs.

- Increase or decrease the size of the pool of Job Engines or Training Engines, or add or modify the pool of Translation Engines for a given Language Pair.

- Autoscale the number of Translation Engines, depending on the system load.
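To give a feel for how CPU-based autoscaling of Translation Engines might behave, the sketch below applies the replica calculation documented for the Kubernetes Horizontal Pod Autoscaler: `desired = ceil(current * currentMetric / targetMetric)`, skipped when the ratio is within a tolerance of 1.0 (0.1 by default). That the Edge charts rely on a Horizontal Pod Autoscaler is an assumption, and the function name and example numbers are hypothetical.

```python
import math


def desired_replicas(current_replicas, current_cpu_pct, target_cpu_pct,
                     min_replicas, max_replicas, tolerance=0.1):
    """Approximate the HPA replica calculation for a CPU utilization target."""
    ratio = current_cpu_pct / target_cpu_pct
    # Within the tolerance band the autoscaler leaves the replica count alone.
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas
    desired = math.ceil(current_replicas * ratio)
    # Clamp to the configured replica bounds.
    return max(min_replicas, min(max_replicas, desired))


if __name__ == "__main__":
    # Two engines averaging 90% CPU against a 60% target scale out to three.
    print(desired_replicas(2, 90, 60, min_replicas=1, max_replicas=10))
```

The real autoscaler also accounts for pod readiness, missing metrics, and stabilization windows, none of which are modelled here.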

- Controller pod.

- Job Engine pods - all pods share the same environment variables.

- Translation Engine pods - each Language Pair has its own environment variables.

- Training Engine pods - all pods share the same environment variables.
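The environment-variable layout above can be pictured as two lookup tables: one keyed by pod role for the Job and Training Engines, and one keyed by Language Pair for the Translation Engines. The sketch below is purely illustrative; every variable name, value, and Language Pair identifier in it is hypothetical and not taken from the Edge charts.

```python
# Hypothetical variable names and values, for illustration only.
SHARED_ENV = {
    # Every Job Engine pod receives exactly this set.
    "job-engine": {"EXAMPLE_LOG_LEVEL": "info"},
    # Every Training Engine pod receives exactly this set.
    "training-engine": {"EXAMPLE_LOG_LEVEL": "info", "EXAMPLE_USE_GPU": "true"},
}

# Translation Engines are configured per Language Pair.
TRANSLATION_ENV = {
    "EngFra": {"EXAMPLE_LANGUAGE_PAIR": "EngFra"},
    "EngDeu": {"EXAMPLE_LANGUAGE_PAIR": "EngDeu"},
}


def env_for_pod(role, language_pair=None):
    """Return a copy of the environment variables for a pod of the given role."""
    if role == "translation-engine":
        if language_pair is None:
            raise ValueError("translation-engine pods need a language pair")
        return dict(TRANSLATION_ENV[language_pair])
    return dict(SHARED_ENV[role])


if __name__ == "__main__":
    print(env_for_pod("job-engine"))
    print(env_for_pod("translation-engine", "EngFra"))
```

Keeping the per-Language-Pair settings separate means a single pool of Translation Engines can be changed without touching the others.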
