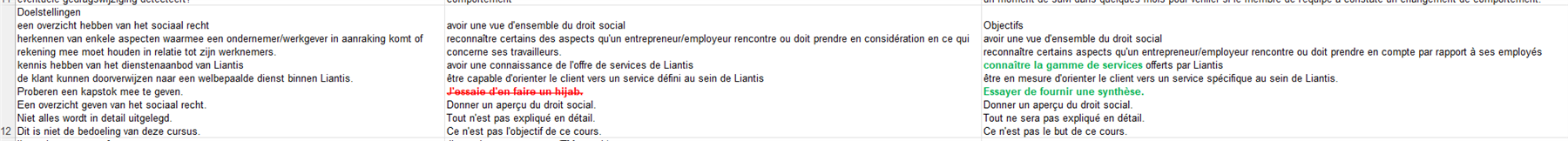

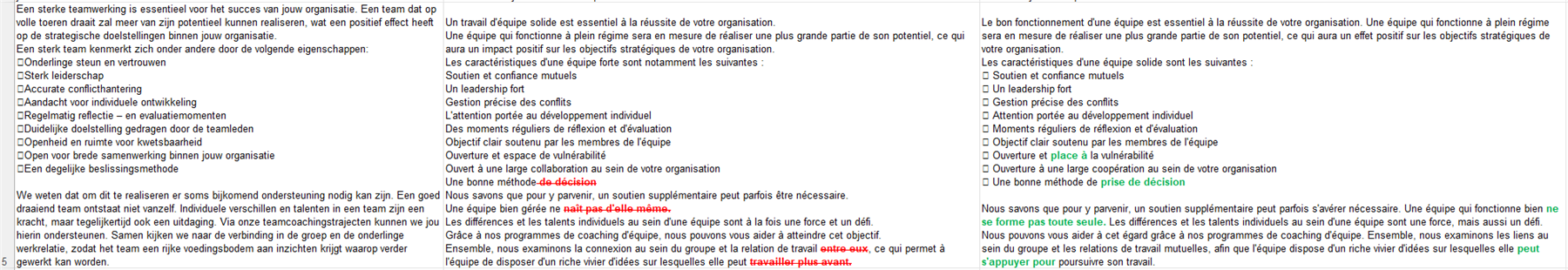

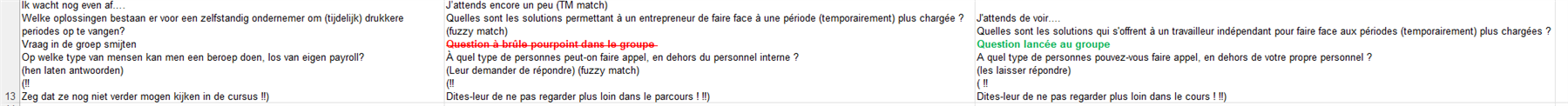

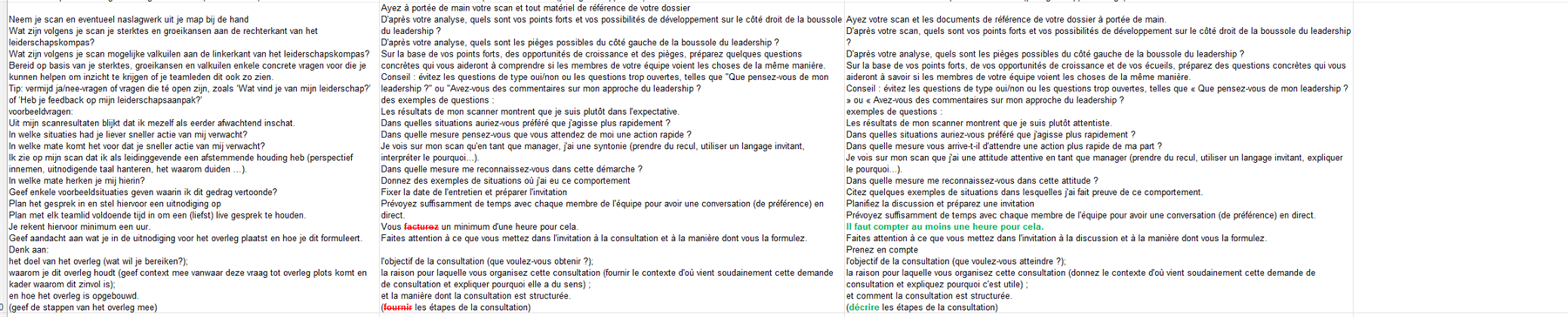

Our company has a DeepL Pro subscription. We noticed a discrepancy between the translations provided by the free online version of DeepL and those provided by the DeepL API for Trados Studio 2021.

We assumed the API provided the same translations as the online version, but after running many tests in various language combinations, it’s safe to say that this is not the case.

The online version's translations are always noticeably better than those provided by the API, but we would obviously rather use the API directly in the CAT tool.

Could you please let us know if this can be fixed in some way?
