Dear all,

Is there any way (with any Trados function, plugin, or third party) to calculate the TUs included in an SDLTM without converting into TMX?

Thanks!

Giannis

Dear all,

Is there any way (with any Trados function, plugin, or third party) to calculate the TUs included in an SDLTM without converting into TMX?

Thanks!

Giannis

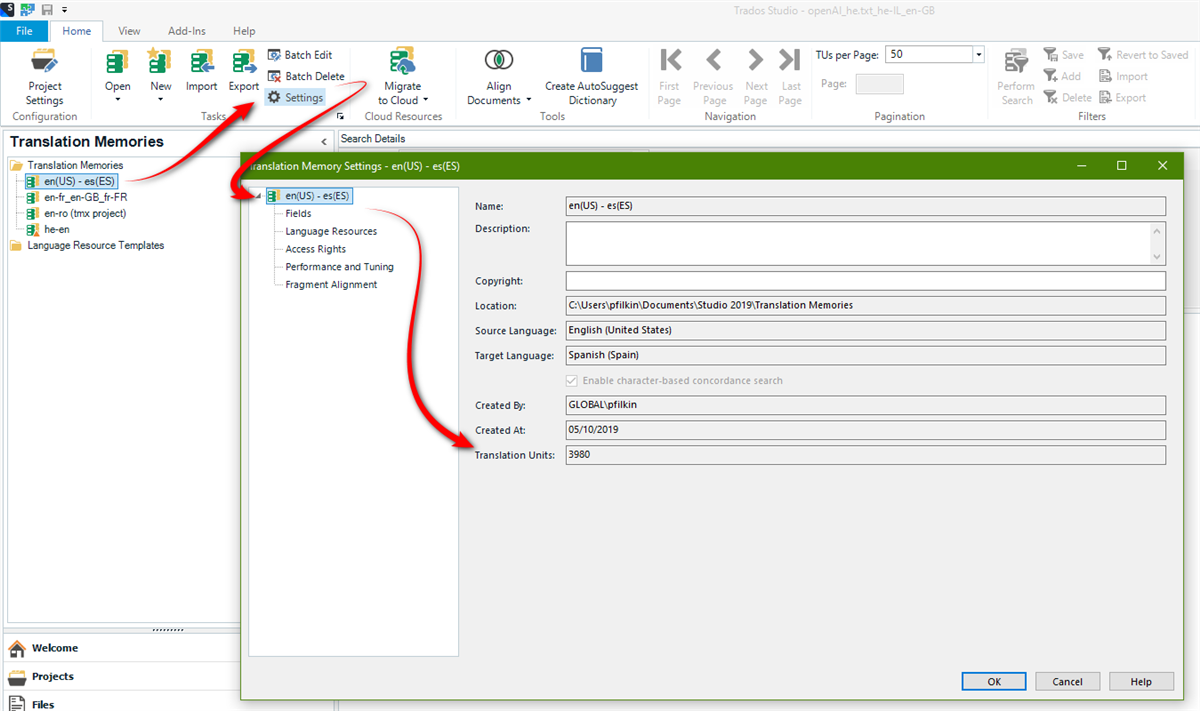

I guess the easiest way is to look in the settings for the TM:

Paul Filkin | RWS

Design your own training!

You've done the courses and still need to go a little further, or still not clear?

Tell us what you need in our Community Solutions Hub

Dear Paul,

Thank you for your prompt reply!

This looks great, but as I need to calculate the TUs for numerous TMs, is it possible to get an average based on the file size?

You could test some to get an idea and then apply the results of the tests to the files you have. I doubt it'll be an accurate task but might yield some results you could work with. I did a couple of tests to see what sort of variance there is with this idea:

5,467,086,848 Bytes => 712,774 TU's => 7,676 Bytes/TU

2,570,682,368 Bytes => 1,202,477 TU's => 2,140 Bytes/TU

298,516,480 Bytes => 56,322 TU's => 5,298 Bytes/TU

141,426,688 Bytes => 56,160 TU's => 2,515 Bytes/TU

136,552,448 Bytes => 16,840 TU's => 8,110 Bytes/TU

7,094,272 Bytes => 4,371 TU's => 1,624 Bytes/TU

As you can see... too much variance to be able to come up with even a reasonable estimate. There are many reasons for this such as the data types and redundancy.

SQLite allows for a wide range of data types, including integers, strings, and blobs, among others. Depending on the data types used in the database, the size of each record can vary significantly. For example, if a large number of fields are defined as TEXT or BLOB, the database can become significantly larger than if those fields were defined as smaller data types like INTEGER.

When a record is deleted from a table, the space it occupied is marked as unused, but it is not immediately released back to the operating system. Instead, this unused space is left in the database file and can be reused by new records in the future. Over time, as records are deleted and new records are inserted, the database file can become fragmented, meaning that the unused space is scattered throughout the file. This can cause the file to become larger than it needs to be, which can lead to slower performance and higher storage costs.

There are more reasons but these two are probably the most likely in terms of an SDLTM. So it's not really as straightforward as you'd have hoped!

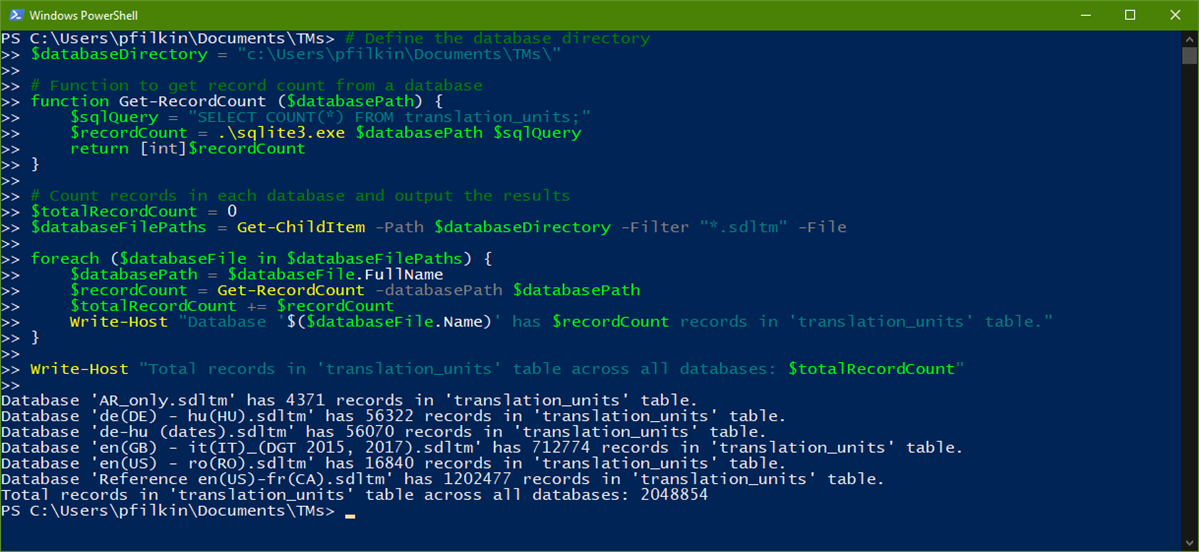

One idea... with a little help from ChatGPT to write the script, is to put all your TMs into one folder. Then put the sqlite3.exe file into the same folder (I zipped it here in case you don't know where to find it - 1207.sqlite3.zip) and then run this powershell script from the same location:

# Define the database directory

$databaseDirectory = "c:\Users\pfilkin\Documents\TMs\"

# Function to get record count from a database

function Get-RecordCount ($databasePath) {

$sqlQuery = "SELECT COUNT(*) FROM translation_units;"

$recordCount = .\sqlite3.exe $databasePath $sqlQuery

return [int]$recordCount

}

# Count records in each database and output the results

$totalRecordCount = 0

$databaseFilePaths = Get-ChildItem -Path $databaseDirectory -Filter "*.sdltm" -File

foreach ($databaseFile in $databaseFilePaths) {

$databasePath = $databaseFile.FullName

$recordCount = Get-RecordCount -databasePath $databasePath

$totalRecordCount += $recordCount

Write-Host "Database '$($databaseFile.Name)' has $recordCount records in 'translation_units' table."

}

Write-Host "Total records in 'translation_units' table across all databases: $totalRecordCount"

You'll need to change the path to your TMs in this line:

$databaseDirectory = "c:\Users\pfilkin\Documents\TMs\"

But if you run this script you'll get this sort of output in a few seconds:

Database 'AR_only.sdltm' has 4371 records in 'translation_units' table.

Database 'de(DE) - hu(HU).sdltm' has 56322 records in 'translation_units' table.

Database 'de-hu (dates).sdltm' has 56070 records in 'translation_units' table.

Database 'en(GB) - it(IT)_(DGT 2015, 2017).sdltm' has 712774 records in 'translation_units' table.

Database 'en(US) - ro(RO).sdltm' has 16840 records in 'translation_units' table.

Database 'Reference en(US)-fr(CA).sdltm' has 1202477 records in 'translation_units' table.

Total records in 'translation_units' table across all databases: 2048854

So that is pretty cool! Looks like this:

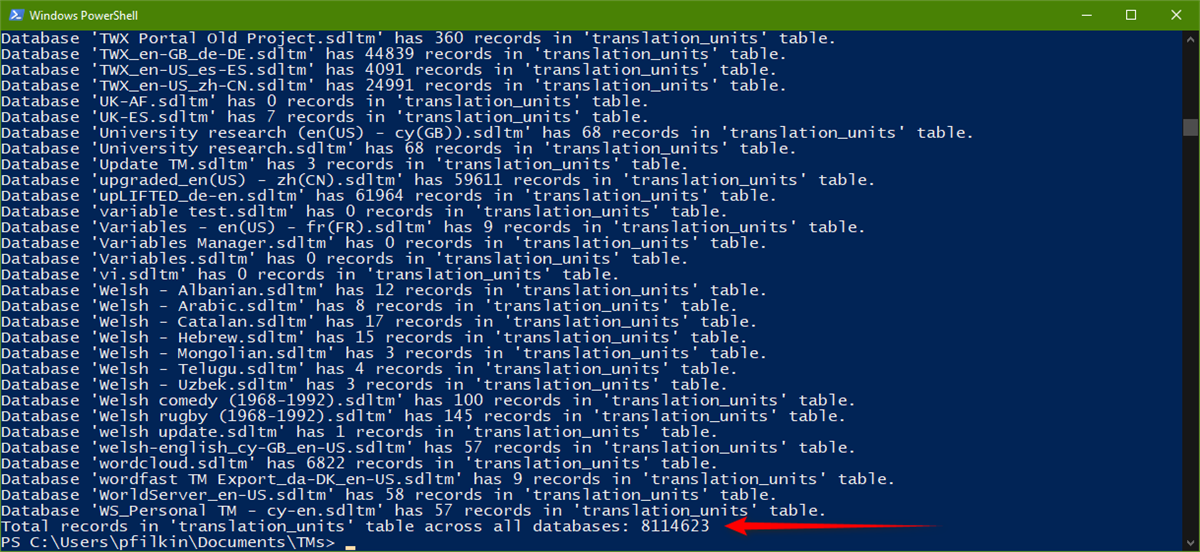

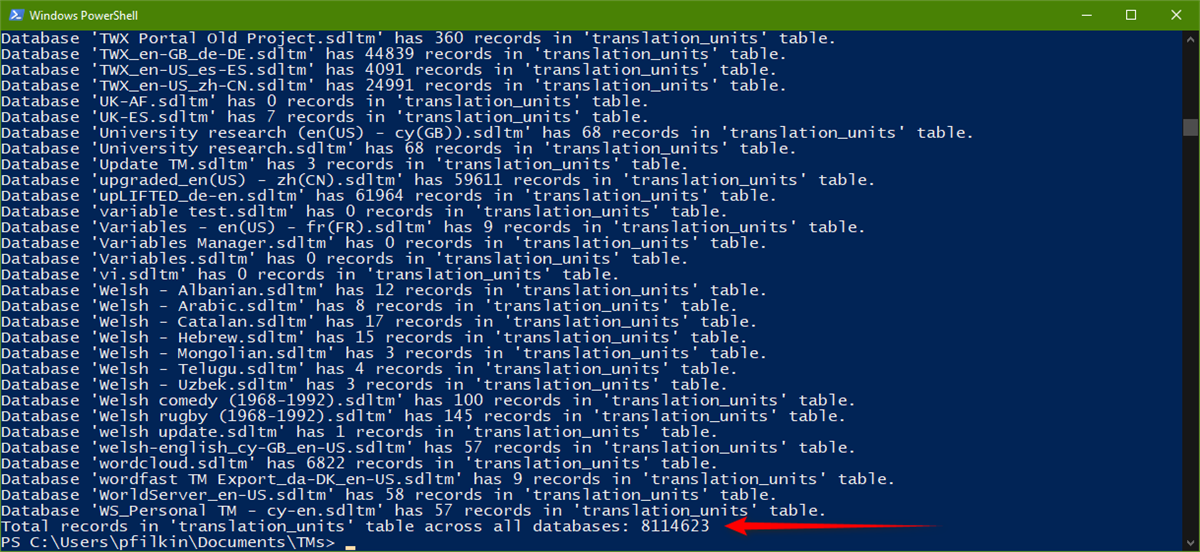

In fact I enjoyed that so much I searched for every SDLTM on my computer and copied them into one folder to test again... so 451 translation memories. Took around 40 seconds to run through them alI think:

So a nice idea... thanks for that :-) I'll keep that script somewhere safe!

Paul Filkin | RWS

Design your own training!

You've done the courses and still need to go a little further, or still not clear?

Tell us what you need in our Community Solutions Hub

You could test some to get an idea and then apply the results of the tests to the files you have. I doubt it'll be an accurate task but might yield some results you could work with. I did a couple of tests to see what sort of variance there is with this idea:

5,467,086,848 Bytes => 712,774 TU's => 7,676 Bytes/TU

2,570,682,368 Bytes => 1,202,477 TU's => 2,140 Bytes/TU

298,516,480 Bytes => 56,322 TU's => 5,298 Bytes/TU

141,426,688 Bytes => 56,160 TU's => 2,515 Bytes/TU

136,552,448 Bytes => 16,840 TU's => 8,110 Bytes/TU

7,094,272 Bytes => 4,371 TU's => 1,624 Bytes/TU

As you can see... too much variance to be able to come up with even a reasonable estimate. There are many reasons for this such as the data types and redundancy.

SQLite allows for a wide range of data types, including integers, strings, and blobs, among others. Depending on the data types used in the database, the size of each record can vary significantly. For example, if a large number of fields are defined as TEXT or BLOB, the database can become significantly larger than if those fields were defined as smaller data types like INTEGER.

When a record is deleted from a table, the space it occupied is marked as unused, but it is not immediately released back to the operating system. Instead, this unused space is left in the database file and can be reused by new records in the future. Over time, as records are deleted and new records are inserted, the database file can become fragmented, meaning that the unused space is scattered throughout the file. This can cause the file to become larger than it needs to be, which can lead to slower performance and higher storage costs.

There are more reasons but these two are probably the most likely in terms of an SDLTM. So it's not really as straightforward as you'd have hoped!

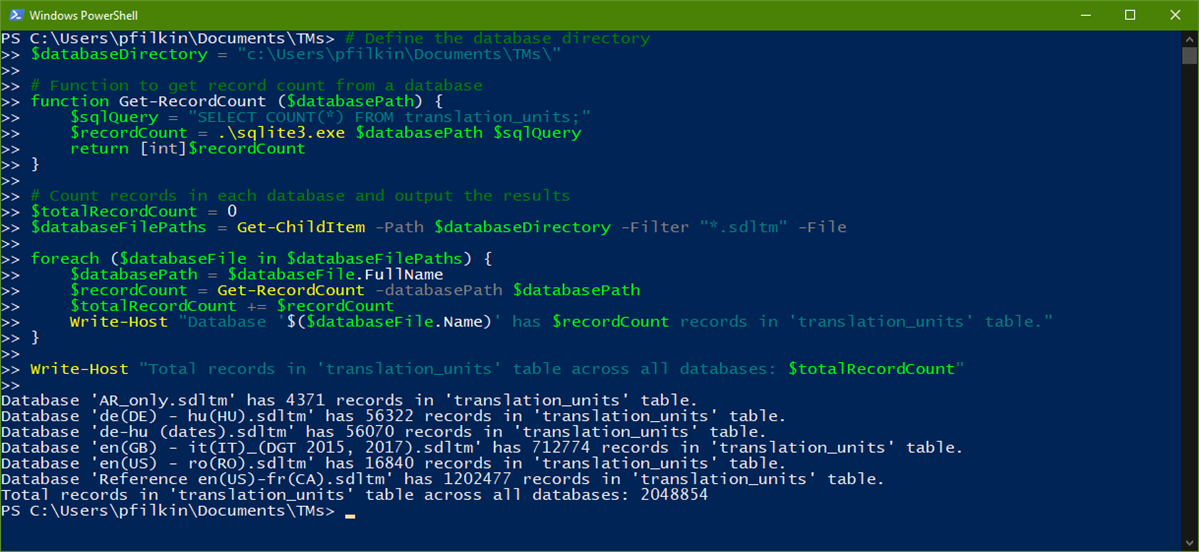

One idea... with a little help from ChatGPT to write the script, is to put all your TMs into one folder. Then put the sqlite3.exe file into the same folder (I zipped it here in case you don't know where to find it - 1207.sqlite3.zip) and then run this powershell script from the same location:

# Define the database directory

$databaseDirectory = "c:\Users\pfilkin\Documents\TMs\"

# Function to get record count from a database

function Get-RecordCount ($databasePath) {

$sqlQuery = "SELECT COUNT(*) FROM translation_units;"

$recordCount = .\sqlite3.exe $databasePath $sqlQuery

return [int]$recordCount

}

# Count records in each database and output the results

$totalRecordCount = 0

$databaseFilePaths = Get-ChildItem -Path $databaseDirectory -Filter "*.sdltm" -File

foreach ($databaseFile in $databaseFilePaths) {

$databasePath = $databaseFile.FullName

$recordCount = Get-RecordCount -databasePath $databasePath

$totalRecordCount += $recordCount

Write-Host "Database '$($databaseFile.Name)' has $recordCount records in 'translation_units' table."

}

Write-Host "Total records in 'translation_units' table across all databases: $totalRecordCount"

You'll need to change the path to your TMs in this line:

$databaseDirectory = "c:\Users\pfilkin\Documents\TMs\"

But if you run this script you'll get this sort of output in a few seconds:

Database 'AR_only.sdltm' has 4371 records in 'translation_units' table.

Database 'de(DE) - hu(HU).sdltm' has 56322 records in 'translation_units' table.

Database 'de-hu (dates).sdltm' has 56070 records in 'translation_units' table.

Database 'en(GB) - it(IT)_(DGT 2015, 2017).sdltm' has 712774 records in 'translation_units' table.

Database 'en(US) - ro(RO).sdltm' has 16840 records in 'translation_units' table.

Database 'Reference en(US)-fr(CA).sdltm' has 1202477 records in 'translation_units' table.

Total records in 'translation_units' table across all databases: 2048854

So that is pretty cool! Looks like this:

In fact I enjoyed that so much I searched for every SDLTM on my computer and copied them into one folder to test again... so 451 translation memories. Took around 40 seconds to run through them alI think:

So a nice idea... thanks for that :-) I'll keep that script somewhere safe!

Paul Filkin | RWS

Design your own training!

You've done the courses and still need to go a little further, or still not clear?

Tell us what you need in our Community Solutions Hub

Hi Paul ,

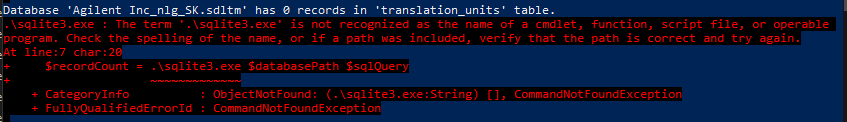

I created a folder where I put the .exe you sent, plus my TMs. I ran your script and Windows PowerShell, but I got the error below. Any idea why? Sorry, I am not familiar with this.

ah, I had to add this line above everything else:

cd C:\\Users\\Giannis.Ouzounis\\Desktop\\TM_COUNT

Dear Paul ,

One more question: Is it possible to modify the script to also read subfolders within a single folder?

Untested... but try replacing this:

# Function to get record count from a database

function Get-RecordCount ($databasePath) {

$sqlQuery = "SELECT COUNT(*) FROM translation_units;"

$recordCount = .\sqlite3.exe $databasePath $sqlQuery

return [int]$recordCount

}

With this:

# Function to get record count from a database

function Get-RecordCount ($databasePath) {

$sqlQuery = "SELECT COUNT(*) FROM translation_units;"

$totalRecordCount = 0

# Get all files in the database path, including subfolders

$files = Get-ChildItem -Path $databasePath -Recurse -File

# Iterate through each file

foreach ($file in $files) {

$recordCount = .\sqlite3.exe $file.FullName $sqlQuery

$totalRecordCount += [int]$recordCount

}

return $totalRecordCount

}

See if that works for you?

Paul Filkin | RWS

Design your own training!

You've done the courses and still need to go a little further, or still not clear?

Tell us what you need in our Community Solutions Hub

Maybe optimized slightly and don't forget to change the paths to suit your situation:

# Define the database directory

$databaseDirectory = "c:\Users\pfilkin\Documents\TMs\"

# Function to get record count from a database

function Get-RecordCount ($databasePath) {

$sqlQuery = "SELECT COUNT(*) FROM translation_units;"

$totalRecordCount = 0

# Get all files in the database path, including subfolders

$files = Get-ChildItem -Path $databasePath -Recurse -Filter "*.sdltm" -File

# Iterate through each file

foreach ($file in $files) {

$recordCount = .\sqlite3.exe $file.FullName $sqlQuery

$totalRecordCount += [int]$recordCount

Write-Host "Database '$($file.Name)' has $recordCount records in 'translation_units' table."

}

return $totalRecordCount

}

# Count records in each database and output the results

$totalRecordCount = Get-RecordCount -databasePath $databaseDirectory

Write-Host "Total records in 'translation_units' table across all databases: $totalRecordCount"

Paul Filkin | RWS

Design your own training!

You've done the courses and still need to go a little further, or still not clear?

Tell us what you need in our Community Solutions Hub

Thank you, Paul ! Seems to be working great. I think it takes a bit more time than with the original script, but I can't complain since now I don't need to do this manually on 80 subfolders :)