Is there a setting that needs to be activated to have a matching term from a termbase inserted in an NMT suggestion? This is not happening by default. I am using the new NMT provider in Studio 2019.

RWS Community

There has never been a feature like this, even with the old statistical engines.

We are going to start work shortly on activating dictionary support via the user interface for the SDL MT Cloud plugin, but this won't use a termbase directly, at least not in the initial implementation. It will use the dictionaries that are available as part of an SDL MT Cloud account.

Paul Filkin | RWS

Design your own training!

You've done the courses and still need to go a little further, or still not clear?

Tell us what you need in our Community Solutions Hub

I thought I remembered uploading my TermBase to LC and getting TermBase entries back in my translations... That was before "custom termbase format" became restricted to business users...

Daniel

> I thought I remembered uploading my TermBase to LC and getting TermBase entries back in my translations

Perhaps you recall the adaptive MT, which could learn from what you entered as the translation? So if you enforced a particular term, after a while it would be used.

Paul Filkin | RWS

Yes, it did that, too, but I could also upload a TB:

I can't get this to work now, but I seem to remember it worked a year ago. Pretty sure I used an adaptive MT with term replacement based on an uploaded TB.

Daniel

Hi Daniel, hi Paul,

I have asked a similar question: termbase upload/dictionary use worked with SMT engines, but for NMT engines there now seems to be only a paid solution. See the answers from Dusan Halamka and Quinn Lam in https://community.sdl.com/product-groups/machine_translation/f/forum/28029/corrupted-words-with-nmt-engine

Kind regards

Christine

Thanks, Christine Bruckner.

As pointed out in the thread you mentioned: "SDL Language Cloud provider does provide Dictionary support, but it is for SMT (Statistical MT) and soon to be deprecated." (emphasis is mine)

This was written two months ago, which may explain why using termbases worked last year but does not seem to work anymore.

I think the USP of SDL's MT solutions has to be integration with Studio and MultiTerm, as the MT market is pretty competitive, so I would love to see a terminology option for LC.

Daniel

Hi Daniel,

the dictionary feature for SMT was just a brute-force search & replace of terms coming from the uploaded termbase, with no linguistic analysis or artificial intelligence involved. This actually degraded the (S)MT results, especially if the termbase/glossary was uploaded "as is" without any cleanup.
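To make the "brute-force" point concrete, here is a minimal Python sketch of plain glossary search & replace. It assumes the old SMT dictionary feature behaved like a literal string substitution on the segment text; the thread does not describe the actual implementation, and `naive_replace` and `word_boundary_replace` are hypothetical names.

```python
import re

def naive_replace(segment: str, glossary: dict[str, str]) -> str:
    """Brute-force search & replace: every occurrence of a source term is
    swapped for its target term, with no linguistic analysis at all."""
    for source, target in glossary.items():
        segment = segment.replace(source, target)  # also fires inside longer words
    return segment

def word_boundary_replace(segment: str, glossary: dict[str, str]) -> str:
    """Slightly safer variant: only whole words are replaced, but inflected
    forms (plurals, case endings) are still missed."""
    for source, target in glossary.items():
        pattern = r"\b" + re.escape(source) + r"\b"
        segment = re.sub(pattern, target, segment)
    return segment

glossary = {"art": "Kunst", "teacher": "Lehrer"}
print(naive_replace("We start the art exhibition.", glossary))
# We stKunst the Kunst exhibition.
print(word_boundary_replace("Two teachers and one teacher.", glossary))
# Two teachers and one Lehrer.
```

Even with word boundaries, nothing here handles inflection, case or agreement, which is why an uncleaned glossary could make the output worse rather than better.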

So I hope that the terminology option of NMT is more refined - tackling the terminology challenge in NMT is not easy, but SDL seems to have done some in-depth research.

Kind regards

Christine

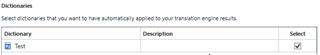

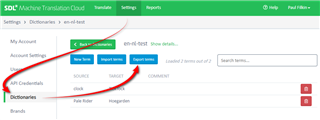

We just released an updated version of the plugin that supports the use of available dictionaries in your account:

https://appstore.sdl.com/language/app/sdl-machine-translation-cloud/941/

These are not Language Cloud Dictionaries, these are the dictionaries you can load to your SDL Machine Translation Cloud account and they are used server side to present you with the adapted NMT results.

> I hope that the terminology option of NMT is more refined - tackling the terminology challenge in NMT is not easy, but SDL seems to have done some in-depth research.

I'm not sure this solution offers anything more sophisticated yet. While we were developing the solution in the plugin I asked a similar question and this was the response:

> The first phase of the dictionary matching is done when we detect the matches for each segment and generate the dictionary constraints for the decoder to use. I.e. when processing each segment, we check if there are any dictionary matches for it, and assuming there are, we generate the matched dictionary constraints that define what sections of the segment to replace with what dictionary text. For BCM (Bilingual Content Model) input, it’s a straightforward process since this information is represented in the document and no searching is needed besides converting it to the expected dictionary constraints format used internally by the decoder. In the next phase, the decoder then processes the segment taking the constraints into account.
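The first phase quoted above (find dictionary entries in a segment, then express each hit as a constraint saying which span to replace with which dictionary text) might look roughly like this in Python. This is a sketch under assumptions: the `Constraint` fields, whole-word matching and the longest-match-first rule are illustrative choices, not the internal SDL format, and the second phase (the decoder applying the constraints) is not shown.

```python
import re
from dataclasses import dataclass

@dataclass(frozen=True)
class Constraint:
    start: int   # character offset where the matched source span begins
    end: int     # offset just past the matched span
    target: str  # dictionary text the decoder should use for this span

def find_constraints(segment: str, dictionary: dict[str, str]) -> list[Constraint]:
    """Detect dictionary matches and turn them into constraints.
    Longer source terms are tried first; a span already claimed by a
    longer match is not reused by a shorter one."""
    constraints: list[Constraint] = []
    taken = [False] * len(segment)
    for source in sorted(dictionary, key=len, reverse=True):
        pattern = r"\b" + re.escape(source) + r"\b"
        for match in re.finditer(pattern, segment):
            span = range(match.start(), match.end())
            if any(taken[i] for i in span):
                continue  # overlaps an earlier, longer match
            for i in span:
                taken[i] = True
            constraints.append(Constraint(match.start(), match.end(), dictionary[source]))
    return sorted(constraints, key=lambda c: c.start)

segment = "Install the control unit before the unit test."
dictionary = {"control unit": "Steuergerät", "unit": "Einheit"}
for c in find_constraints(segment, dictionary):
    print(segment[c.start:c.end], "->", c.target)
# control unit -> Steuergerät
# unit -> Einheit
```

Note that the second hit ("unit" in "unit test") still receives "Einheit": with simple 1:1 entries the matcher has no notion of context.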

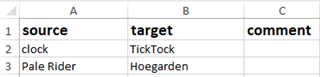

So they take a dictionary with one-to-one mappings from source to target (no synonyms). Good maintenance of the "termbase" is therefore essential to ensure good results.
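Since the dictionary only accepts one-to-one source-to-target pairs, it helps to check an export for source terms with several targets before uploading. A minimal Python sketch, assuming the terms are already available as (source, target) pairs; `find_one_to_many` is a hypothetical helper.

```python
from collections import defaultdict

def find_one_to_many(pairs: list[tuple[str, str]]) -> dict[str, list[str]]:
    """Return source terms that map to more than one distinct target term.
    A dictionary limited to 1:1 mappings cannot hold these as they are."""
    targets: dict[str, set[str]] = defaultdict(set)
    for source, target in pairs:
        targets[source.strip()].add(target.strip())
    return {src: sorted(tgts) for src, tgts in targets.items() if len(tgts) > 1}

pairs = [
    ("teacher", "Lehrer"),
    ("teacher", "Lehrerin"),
    ("control unit", "Steuergerät"),
    ("control unit", "Steuergerät"),  # exact duplicate: harmless, not flagged
]
print(find_one_to_many(pairs))
# {'teacher': ['Lehrer', 'Lehrerin']}
```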

Paul Filkin | RWS

Hi Paul,

thanks!

So, as in the good old RBMT days, concept-oriented multilingual termbases cannot be used "as is" for language-pair-specific, term-oriented MT dictionaries.

I have found the Glossary Converter, and especially its Multi-Line Format / Repeat Source term option, very helpful when preparing RBMT dictionaries from termbases, and then doing additional checks and clean-ups in MS Excel.
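Expanding a concept-oriented entry into language-pair-specific rows is essentially a cross product of the source and target terms. The Python sketch below approximates a "one row per term, repeat the source term" layout; it is an assumption about the general idea, not a description of the Glossary Converter's exact output, and the entry structure and `concept_to_rows` are hypothetical.

```python
from itertools import product

def concept_to_rows(concept: dict[str, list[str]], src_lang: str, tgt_lang: str) -> list[tuple[str, str]]:
    """Expand one concept entry (language code -> list of terms) into
    source/target rows, repeating each source term for every target term."""
    sources = concept.get(src_lang, [])
    targets = concept.get(tgt_lang, [])
    return list(product(sources, targets))

concept = {
    "en": ["teacher", "instructor"],
    "de": ["Lehrer", "Lehrerin"],
    "fr": ["enseignant"],  # ignored for an en->de dictionary
}
print(concept_to_rows(concept, "en", "de"))
# [('teacher', 'Lehrer'), ('teacher', 'Lehrerin'), ('instructor', 'Lehrer'), ('instructor', 'Lehrerin')]
```

Every row here maps one term to several counterparts, which is exactly what the manual clean-up has to resolve before the list can serve as a one-to-one MT dictionary.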

SMT and NMT dictionaries need even "cleaner" entries; see https://docs.sdl.com/LiveContent/content/en-US/SDL%20BeGlobal%20Online%20Help-v1/GUID-C0E595E6-28F5-4C59-8BBB-CAF8620FB4FA#docid=GUID-9F42DFFB-4E0C-4FA0-BAB5-4531EDBFC636&filename=GUID-06759C71-53ED-41F9-93AA-483860BE8FA2.xml&query=&scope=&tid=&resource=&inner_id=&addHistory=true&toc=false&eventType=lcContent.loadDocGUID-9F42DFFB-4E0C-4FA0-BAB5-4531EDBFC636

It would be good to have an app for supporting such termbase-to-MT-dictionary conversion steps ;-)

Kind regards

Christine

Indeed... and the Glossary Converter is still useful today. The import feature for these dictionaries is based on Excel. If you go here and export an Excel file after adding a couple of terms, you can see what format is required:

Basically, it's a simple source/target/(optional) comment format.
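The three-column layout can be illustrated with Python's standard `csv` module. This is only a sketch: the actual import expects an Excel file, and the header names `Source`, `Target` and `Comment` are assumptions, so compare them with an export from your own account.

```python
import csv
import io
from typing import Optional

def dictionary_rows_to_csv(rows: list[tuple[str, str, Optional[str]]]) -> str:
    """Write source/target/comment rows; the comment column may be empty."""
    buffer = io.StringIO()
    writer = csv.writer(buffer, lineterminator="\n")
    writer.writerow(["Source", "Target", "Comment"])  # assumed header names
    for source, target, comment in rows:
        writer.writerow([source, target, comment or ""])
    return buffer.getvalue()

print(dictionary_rows_to_csv([
    ("control unit", "Steuergerät", "automotive"),
    ("teacher", "Lehrer", None),
]), end="")
# Source,Target,Comment
# control unit,Steuergerät,automotive
# teacher,Lehrer,
```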

The rules you mention are also important to follow for the best results.

> It would be good to have an app for supporting such termbase-to-MT-dictionary conversion steps ;-)

This is an interesting idea. How do you see this working? I'm imagining a wizard that takes you through several steps:

As I think about this, the rules do raise a lot of questions in my head, especially around the use of separate entries for gender-based translations. For example, how will the NMT engine decide whether to use Lehrer or Lehrerin as the translation for teacher with only a simple substitution mechanism in place? If you have questions on all of this too, and I imagine you do, post them here and I'll get a comprehensive answer that should be helpful in working with this solution.

Paul Filkin | RWS

Hi Paul and Christine Bruckner,

From a linguistic (and subscription) point of view, this is developing beyond my level of understanding, but from what I remember learning at uni about neural networks (https://mitpress.mit.edu/books/parallel-distributed-processing-volume-1), it seems to me that an NMT approach to translation might generally not harmonize with RBMT-style glossaries. The main benefit of neural networks, as I remember, is that they work on the sub-symbolic level, and that they have a "natural" way of learning, i.e. adjusting the weights on the individual "neurons". The trade-off is their opacity.

Working with ModernMT, I notice that while I translate it will (to some extent) adapt to my use of terminology. So from segment n to segment n+1, my terminology will in some cases be taken on board. "To some extent" and "in some cases" because this is how a neural network functions.

(When I used the SMT LC with a termbase, I used it as a glorified TermInjector: product listings where both source and target terms were in the nominative singular and not in a sentence structure, e.g. products containing product components with measurements, prices etc. I was quite happy with that.)

So rather than imposing explicit rules ("teacher"->"Lehrer") on a system that works with implicit rules, why not focus on customization through bilingual training corpora, and perhaps allow the user to temporarily change the "learning factor" for this purpose? (Enable normal learning through feedback, but raise the learning factor either for designated projects or for segments containing specific "trigger" terms.)

This would be a way of overcoming e.g. the gender problem, since NMTs are usually quite good at getting the gender right if there is a cue like a gender-specific pronoun.
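The "learning factor" idea can be expressed as a small rule for how strongly an adaptive engine should learn from a confirmed segment. This is a purely hypothetical Python sketch: no existing MT API is implied, and `adaptation_rate`, the boost value and the single-word trigger matching are all illustrative assumptions.

```python
def adaptation_rate(segment: str, project: str, base_rate: float,
                    boosted_projects: set[str], trigger_terms: set[str],
                    boost: float = 4.0) -> float:
    """Rate at which a (hypothetical) adaptive engine learns from one
    confirmed segment: normal learning by default, a higher rate for
    designated projects or segments containing a trigger term."""
    # Only single-word triggers are matched in this sketch.
    words = {w.strip(".,;:!?\"'()").lower() for w in segment.split()}
    triggers = {t.lower() for t in trigger_terms}
    if project in boosted_projects or words & triggers:
        return base_rate * boost
    return base_rate

print(adaptation_rate("The teacher arrived.", "p1", 0.5, set(), {"teacher"}))
# 2.0
```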

If there is a need for a glossary, why not build on the TermInjector model, where the user can define rules and replacement terms based on the TM output (or, in this case, the MT output)?
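A TermInjector-style step could be modelled as an ordered list of user-defined regular-expression rules applied to the MT output. The Python sketch below is hypothetical: it does not reproduce TermInjector's own rule syntax, and both example rules are invented for a product-listing scenario like the one described above.

```python
import re

Rule = tuple[str, str]  # (regex applied to MT output, replacement)

# Hypothetical user rules, applied in order.
RULES: list[Rule] = [
    (r"\bVerbindungsrohr\b", "Anschlussrohr"),  # enforce the approved term
    (r"(\d+)\s*mm\b", r"\1 mm"),                # normalize "25mm" to "25 mm"
]

def apply_rules(mt_output: str, rules: list[Rule]) -> str:
    """Post-edit the MT output by applying each rule in sequence."""
    for pattern, replacement in rules:
        mt_output = re.sub(pattern, replacement, mt_output)
    return mt_output

print(apply_rules("Verbindungsrohr, 25mm, Edelstahl", RULES))
# Anschlussrohr, 25 mm, Edelstahl
```

Both terms in the first rule are neuter nouns, so swapping them does not break article agreement; in full sentences, rules like this quickly run into the same inflection and gender issues discussed in this thread.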

For what it's worth.

Daniel

Hi Paul, hi Daniel,

> The main benefit of neural networks, as I remember, is that they work on the sub-symbolic level,

The state of the art is, to my knowledge, a mix of the sub-symbolic level (character level or byte-pair encoding) and the word level where needed (e.g. for anonymization, but also for tackling the terminology challenges).

> Working with ModernMT, I notice that while I translate it will (to some extent) adapt to my use of terminology. So from segment n to segment n+1, my terminology will in some cases be taken on board.

I think this is a slightly different scenario, as the MT learns from your translation: not only terminology, but also style etc., so this is customization of NMT in a more general sense. And there are quite a few strategies for customization, for example producing synthetic sentences in order to make the NMT learn the correct solution.
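One simple form of synthetic data is to slot approved term pairs into parallel template sentences. A hypothetical Python sketch: the templates, terms and `synthesize_pairs` are invented for illustration, and real customization pipelines are considerably more elaborate.

```python
def synthesize_pairs(templates: list[tuple[str, str]],
                     terms: list[tuple[str, str]]) -> list[tuple[str, str]]:
    """Fill each parallel template with each approved (source, target) term
    pair to produce synthetic bilingual training sentences."""
    return [
        (src_t.format(term=s), tgt_t.format(term=t))
        for src_t, tgt_t in templates
        for s, t in terms
    ]

templates = [
    ("Replace the {term}.", "Ersetzen Sie das {term}."),
    ("Check the {term} for leaks.", "Prüfen Sie das {term} auf Undichtigkeiten."),
]
terms = [("connecting pipe", "Anschlussrohr")]
for pair in synthesize_pairs(templates, terms):
    print(pair)
# ('Replace the connecting pipe.', 'Ersetzen Sie das Anschlussrohr.')
# ('Check the connecting pipe for leaks.', 'Prüfen Sie das Anschlussrohr auf Undichtigkeiten.')
```

The German templates hard-code the neuter article "das", so they only work for neuter nouns such as Anschlussrohr; without gender information attached to the term, a generator like this cannot choose the right article.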

And I think we have been discussing "insert termbase terms in NMT suggestion", i.e. integrating and re-using validated terminology from a "multi-purpose" termbase ;-) This is still one of the remaining challenges in NMT, as many researchers admit.

In my opinion, this is a chicken-and-egg problem:

From my time converting termbases to RBMT dictionaries, I remember preaching that gender and POS information should be added to the termbase as mandatory fields: it takes a terminologist only a little time to fill them in, but they are vital for an MT dictionary. The same applies to proper definitions, context sentences, information about the subject field and other rather ontological information that might help to disambiguate a term or word in context. Such explicit, human-coded information is equally or even more relevant in times of data-driven approaches like NMT.

On the other hand, there is a lot of research on context-sensitive word embeddings for NMT (BERT etc.), which might be applied to or combined with the "rich" termbase exports.

Otherwise, you would need to hire lots of students to perform the termbase-to-MT-dictionary conversion steps and manual additions.
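The case for mandatory gender and part-of-speech fields can be made concrete with a small completeness check run before any termbase-to-MT-dictionary conversion. A Python sketch under stated assumptions: the `TermEntry` fields, the set of gendered languages and the rule that gender is only required for nouns are illustrative, not a MultiTerm schema.

```python
from dataclasses import dataclass
from typing import Optional

# Languages in which nouns carry grammatical gender (illustrative subset).
GENDERED_LANGUAGES = frozenset({"de", "fr", "it", "es"})

@dataclass
class TermEntry:
    term: str
    language: str                 # e.g. "de"
    pos: Optional[str] = None     # part of speech, e.g. "noun", "verb"
    gender: Optional[str] = None  # e.g. "m", "f", "n"

def missing_mt_fields(entry: TermEntry) -> list[str]:
    """List the fields an MT dictionary conversion would need but the entry lacks."""
    missing = []
    if not entry.pos:
        missing.append("pos")
    # Gender can only be required once we know the term is a noun.
    if entry.pos == "noun" and entry.language in GENDERED_LANGUAGES and not entry.gender:
        missing.append("gender")
    return missing

print(missing_mt_fields(TermEntry("Lehrer", "de")))               # ['pos']
print(missing_mt_fields(TermEntry("Lehrer", "de", "noun")))       # ['gender']
print(missing_mt_fields(TermEntry("Lehrer", "de", "noun", "m")))  # []
```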

Kind regards

Christine