I would like to ask the community to weigh in on the XLIFF standard. It occurs to me that the bilingual XLIFF files we often see in WordPress exports are not at all optimized for Translation Memory workflows. A couple of issues (let's assume all examples define English as the source language):

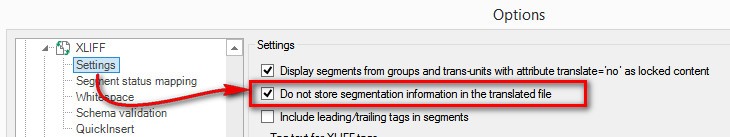

- XLIFF files are, by their bilingual nature, pre-segmented: each pair of <source> and <target> elements is one segment. I understand that this limits Studio's ability to apply its full stop segmentation rules. I've read elsewhere on SDL's community page that users report very long paragraphs being extracted as single segments. This is our experience as well. It makes working with sentence-segmented Translation Memories impossible. The only resolution seems to be to split segments manually, which would be a cumbersome process.

- Although the XLIFF standard doesn't seem to have provisions for embedded content or CDATA support, the reality is that WordPress exports do contain HTML content and WordPress shortcodes. Studio's XLIFF filter does not allow processing embedded content. The typical workaround is to use a custom XML filter instead. This works great, but it requires you to process the <target> elements, because an XML filter replaces the text it extracts. It does not map source and target in Studio the way the XLIFF filter does. Using the XML filter also takes care of segmentation.

- I understand that there are some standards around tag element conversion in the native XLIFF file that may help (I have not tested this yet), but that doesn't resolve the segmentation issue.

- The XLIFF standard defines that <target> should always contain the latest translation. When the source content gets updated, we receive an updated English <source> and a <target> pre-populated with the old translation. This wreaks total havoc with our XML filter, because we can no longer process <target> to get the latest source. Processing <source> is an option, but the translation then replaces <source>, so a manual workaround would be needed. I can foresee several creative ways. One option is to strip the <target> elements from the source XLIFF file, process the <source> segments using our XML filter, translate with the help of our TM and then export back to XLIFF. What we have now is an XLIFF file with the target translations in <source>. The manual workaround would be to replace <source> with <target> and </source> with </target>. The only thing left to do is to somehow restore the English <source> for each item. Maybe a file compare could work, where you reject the deletion of the original source and accept the addition of the target. However, how cumbersome is that when you deal with many files?
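The strip-and-restore workaround in the last point could in principle be scripted rather than done by hand. Below is a minimal sketch in Python, not a tested production tool. It assumes XLIFF 1.2 (namespace `urn:oasis:names:tc:xliff:document:1.2`) and that every `<trans-unit>` carries a stable `id` that survives the round trip through the XML filter. The function names `strip_targets` and `merge_translations` are made up for illustration.

```python
import copy
import xml.etree.ElementTree as ET

# Assumption: the export is XLIFF 1.2; adjust the namespace for other versions.
NS = "urn:oasis:names:tc:xliff:document:1.2"
ET.register_namespace("", NS)  # serialize without an "ns0:" prefix


def _units(root):
    """Index all <trans-unit> elements by their id attribute."""
    return {u.get("id"): u for u in root.iter(f"{{{NS}}}trans-unit")}


def strip_targets(xliff_xml):
    """Return a copy of the XLIFF with every <target> removed, so that
    an XML filter can process <source> only."""
    root = ET.fromstring(xliff_xml)
    for unit in root.iter(f"{{{NS}}}trans-unit"):
        for target in unit.findall(f"{{{NS}}}target"):
            unit.remove(target)
    return ET.tostring(root, encoding="unicode")


def merge_translations(original_xml, translated_xml):
    """Copy the translation that ended up in <source> of the translated
    file into <target> of the original file, matching units by id.
    The English <source> of the original file is left untouched."""
    original = ET.fromstring(original_xml)
    translated = _units(ET.fromstring(translated_xml))
    for unit_id, unit in _units(original).items():
        done = translated.get(unit_id)
        source = unit.find(f"{{{NS}}}source")
        if done is None or source is None:
            continue  # no counterpart: leave this unit as it is
        translation = done.find(f"{{{NS}}}source")
        if translation is None:
            continue
        target = unit.find(f"{{{NS}}}target")
        if target is None:
            # Create <target> directly after <source> to keep element order.
            target = ET.Element(f"{{{NS}}}target")
            target.tail = source.tail
            unit.insert(list(unit).index(source) + 1, target)
        # clear() also wipes attributes and tail, so save and restore them.
        attrs, tail = dict(target.attrib), target.tail
        target.clear()
        target.attrib.update(attrs)
        target.tail = tail
        target.text = translation.text
        for child in translation:  # keep any inline elements
            target.append(copy.deepcopy(child))
    return ET.tostring(original, encoding="unicode")
```

One caveat: `xml.etree.ElementTree` does not preserve CDATA sections on output. It writes the same text with escaped characters instead, which is equivalent XML but may matter if the importing system expects CDATA.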

In conclusion: right now the only productive process I can figure out is the XML route for original content, as long as <target> is populated with the English source content. Updated files, however, remain cumbersome to process.

I've tried to research the history behind XLIFF a little bit, but I can't determine whether Translation Memory workflows were ever considered in developing this standard. The bilingual nature of XLIFF may be very helpful to developers and certainly opens up translation to a wider audience, but I feel that this standard could have a negative impact on the quality of translation by LSPs. XLIFF seems to take us away from having the Translation Memory (TM, MT, etc.) as the central database for all translation management. Nowhere else in translation management do we typically deal with bilingual files, because we manage translations and translation updates from the English source at all times.

I hope SDL can weigh in on best practices for working with XLIFF, and that translation professionals and LSPs can weigh in on the impact it has on their workflows. I'm looking particularly for ideas that remedy the problems I described with XLIFF, or for best practices on workarounds, perhaps including custom filters. I found one post that mentioned a filter that handles embedded content in XLIFF, but the link is down. Even if that is resolved, I don't foresee that the segmentation issue can realistically be resolved in bilingual files.

Many thanks!

Jeroen Tetteroo

Language Solutions

St. Louis, MO

Using Studio 2017, 2014 Professional
